#435 — The Last Invention

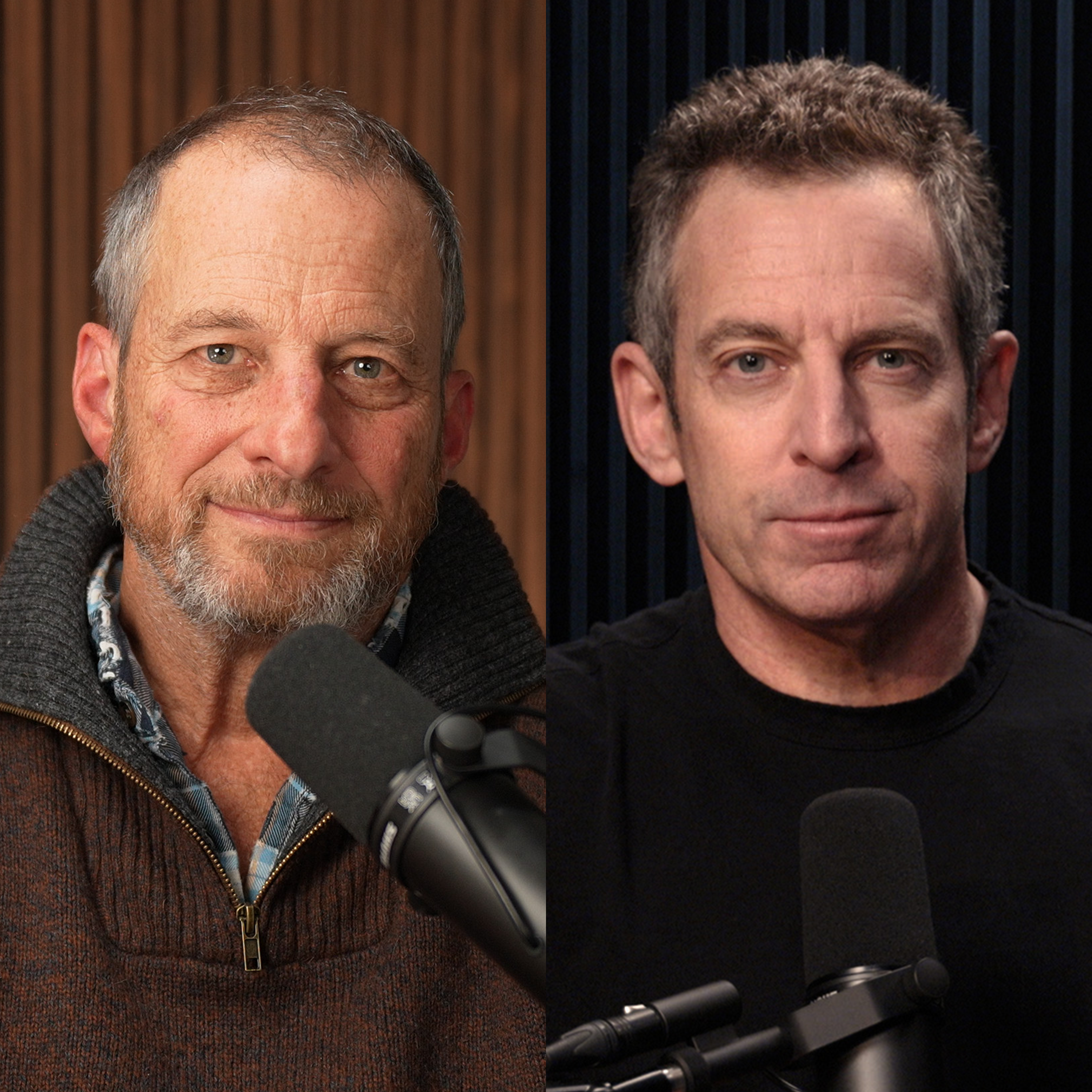

Sam Harris introduces the first episode of The Last Invention, a new podcast series on the hype and fear about the AI revolution, reported by Gregory Warner and Andy Mills.

Gregory Warner was a foreign correspondent in Russia and Afghanistan, and the East Africa bureau chief for NPR. He created and hosted the podcast Rough Translation. He also publishes stories on This American Life and in The New York Times. Andy Mills is a reporter and editor, formerly of The New York Times, where he helped create their audio department and shows like The Daily and Rabbit Hole.

The Last Invention is a limited-run series with eight total episodes. You can find it anywhere you listen to podcasts, with new episodes released weekly. You can sign up for their mailing list on Substack at https://longviewinvestigations.substack.com/, and you can also subscribe on their website at longviewinvestigations.com.


Transcript

Speaker 1 Welcome to the Making Sense Podcast. This is Sam Harris.

Speaker 1 Well, in today's episode, we are previewing a new podcast series on artificial intelligence created by Andy Mills and Matt Bull. Andy and Matt have been making podcasts and reporting for at least 15 years or so.

Speaker 1 And they've won a bunch of awards: the Peabody, a Pulitzer.

Speaker 1 They've worked at outlets like Spotify and NPR and the New York Times. They also produced The Witch Trials of J.K. Rowling, which I discussed on a previous episode of this podcast.

Speaker 1 And they have a new media company called Longview. And this series on AI, which is titled The Last Invention, is their first release. And so we're previewing that here.

Speaker 1 I am interviewed in it, but there are many other people in there who are well worth listening to: Nick Bostrom, Geoffrey Hinton, Yoshua Bengio, Reid Hoffman, Max Tegmark, Robin Hanson, Jaron Lanier, Tim Urban, Kevin Roose, and others.

Speaker 1 And this is really a great introduction to the topic. It's going to be a limited-run series of eight episodes. And really, more than anything that I've done on this topic, this is the series you can share with your friends and family to get them to understand just what the fuss is about, whether they land on the side of being worried about this issue or on the side of being merely worried that we're going to do something, regulatory or otherwise, to slow our progress. They represent both sides of this controversy in a way that I really don't, because I'm so in touch with one side of it.

Speaker 1 And I think those who aren't in touch with that side are fairly crazy and doctrinaire at this point. So anyway, it's a great introduction to the topic.

Speaker 1 As far as the series itself, it's reported by Andy Mills and Gregory Warner. Andy, as I said, worked for the New York Times, and he actually helped create their audio department and their flagship podcast, The Daily, as well as Rabbit Hole.

Speaker 1 And Gregory Warner has been a foreign correspondent in Russia and Afghanistan and East Africa, where he was the bureau chief for NPR.

Speaker 1 And he created and hosted the podcast Rough Translation. He also publishes stories on This American Life and in the New York Times. So anyway, you're in great hands with this series.

Speaker 1 I'm happy to preview it. I would love to give these guys a boost. Again, their new media company is Longview.

Speaker 1 You can find out more about them over at longviewinvestigations.com, where you can also subscribe and get their newsletter.

Speaker 1 And also, Andy and Matt wanted me to say that they are hiring for this new company. They're looking for reporters, producers, editors, both for writing and for audio and video jobs.

Speaker 1 So if you have those skills, don't be shy. Again, it's longviewinvestigations.com. Anyway, that's it. Enjoy. Thanks for listening.

Speaker 5 This is The Last Invention. I'm Gregory Warner, and our story begins with a conspiracy theory.

Speaker 7 This is reporter Andy Mills. So, Greg, last spring, I got this tip via the encrypted messaging app Signal from a former tech executive. And he was making some pretty wild claims.

Speaker 7 And I wanted to talk to him on the phone, but he thought his phone was being tapped. But the next time I was out in California, I went to meet with him.

Speaker 9 I'm really kind of contending with, like, who I am in this moment. Up until a few months ago, I was an executive in Silicon Valley. And yet here I am, sitting in a living room with you guys, talking about what I think is one of the most important things that needs to be discussed in the whole world, right? Which is the nature in which power is decided in our society.

Speaker 7 And he told me this story: that a faction of people within Silicon Valley had a plot to take over the United States government.

Speaker 7 And that the Department of Government Efficiency, DOGE, under Elon Musk, was really phase one of this plan, which was to fire human workers in the government and replace them with artificial intelligence. And that over time, the plan was to replace all of the government and have artificial intelligence make all the important decisions in America.

Speaker 9 I have seen both the nature of the threat from inside the belly of the beast, as it were, in Silicon Valley, and seen the nature of what's at stake.

Speaker 7 Now, this guy, his name is Mike Brock, and he had formerly been an executive in Silicon Valley. He'd worked alongside some big-name guys like Jack Dorsey, but he'd recently started a substack.

Speaker 7 And he told me that after he published some of these accusations, he had become convinced that people were after him.

Speaker 9 I have reason to believe that I've been followed by private investigators, um, for that and other reasons. I traveled with private security when I went to DC and New York City last week.

Speaker 7 He told me that he had just come back from Washington, DC, where he had met with a number of lawmakers, including Maxine Waters, and debriefed them about this threat to American democracy.

Speaker 9 We are in a democratic crisis. This is a coup. This is a slow-motion, soft coup.

Speaker 5 And so this faction, who is in this faction? What is this, like the Masons or something? Or is it like a secret cult?

Speaker 7 Well, he named several names, people who are recognizable figures in Silicon Valley. And he claimed that this, quote-unquote, conspiracy went all the way up to J.D. Vance, the vice president.

Speaker 7 And he had a name for the people who were behind this coup.

Speaker 9 The accelerationists.

Speaker 7 The accelerationists.

Speaker 7 It was a wild story. Yeah. But, you know, some conspiracies turn out to be true. And it was also an interesting story. So I started making some phone calls. I started looking into it.

Speaker 7 And some of his claims I could not confirm. Maxine Waters, for example, did not respond to my request for an interview. Other claims started to somewhat fall apart.

Speaker 7 And of course, eventually DOGE itself somewhat fell apart. Elon Musk ended up leaving the Trump administration.

Speaker 7 And for a while, it felt like, you know, it was one of those tips that just doesn't go anywhere.

Speaker 7 But in the course of all these conversations I was having with people close to artificial intelligence, I realized that there was an aspect of his story that wasn't just true, but in some ways, it didn't go quite far enough.

Speaker 7 Because there is indeed a faction of people in Silicon Valley who don't just want to replace government bureaucrats, but want to replace pretty much everyone who has a job with artificial intelligence.

Speaker 7 And they don't just think that the AI that they're making is going to upend American democracy. They think it is going to upend the entire world order.

Speaker 12 The world, as you know it, is over. It's not about to be over.

Speaker 13 It's over.

Speaker 14 I believe it's going to change the world more than anything in the history of mankind, more than electricity.

Speaker 7 But here's the thing: they're not doing this in secret.

Speaker 7 This group of people includes some of the biggest names in technology: you know, Bill Gates, Sam Altman, Mark Zuckerberg, most of the leaders in the field of artificial intelligence.

Speaker 12 AI is going to be better than almost all humans at almost all things.

Speaker 15 A kid born today will never be smarter than AI.

Speaker 17 It's the first technology that has no limit.

Speaker 5 So, wait, so you get a tip about like a slow-motion coup against the government, and then you realize, no, no, no, this is not just about the government. This is pretty much every human institution.

Speaker 7 Well, yes and no.

Speaker 7 Many of these accelerationists think that this AI that they're building is going to lead to the end of what we have come to think of as jobs, the end of what we have traditionally thought of as schools.

Speaker 7 Some would even say this could usher in the end of the nation state. But they do not see this as some sort of shadowy conspiracy.

Speaker 7 They think that this may end up literally being the best thing to ever happen to humanity.

Speaker 17 I've always believed that it's going to be the most important invention that humanity will ever make.

Speaker 18 Imagine that everybody will now in the future have access to the very best doctor in the world, the very best educator.

Speaker 1 The world will be richer and can work less and have more.

Speaker 12 This really will be a world of abundance.

Speaker 7 They predict that their AI systems are going to be the thing that helps us to solve the most pressing problems that humanity faces.

Speaker 12 Energy breakthroughs, medical breakthroughs.

Speaker 17 Maybe we can cure all disease with the help of AI.

Speaker 7 They think it's going to be this hinge moment in human history where soon we will be living to maybe be 200 years old, where maybe we'll be visiting other planets, where we will look back in history and think, oh my God, how did people live before this technology?

Speaker 17 Should be a kind of era of maximum human flourishing where we travel to the stars and colonize the galaxy.

Speaker 12 I think a world of abundance really is a reality. I don't think it's utopian given what I've seen that the technology is capable of.

Speaker 5 So these are a lot of bold promises and they come from the people who are selling this technology. Why do they think that the AI that they are building is going to be so transformative?

Speaker 7 Well, the reason that they're making such grandiose statements and these bold predictions about the near future, it comes down to what it is they think that they're making when they say they're making AI.

Speaker 7 This is something that I recently called up my old colleague Kevin Roose to talk about. Kevin, how is it that you describe what it is that the AI companies are making?

Speaker 7 Am I right to say that they're essentially building like a supermind, like a digital super brain?

Speaker 13 Yes, that is correct.

Speaker 7 He's a very well-sourced tech reporter and a columnist at the New York Times.

Speaker 5 Also co-host of the podcast Hard Fork.

Speaker 7 And he says that the first thing to know is that this is far more of an ambitious project than just building something like chatbots.

Speaker 8 Essentially, many of these people believe that the human brain is just a kind of biological computer, that there is nothing special or supernatural about human intelligence, that we are just a bunch of neurons firing and learning patterns in the data that we encounter.

Speaker 8 And that if you could just build a computer that sort of simulated that, you could essentially create a new kind of intelligent being.

Speaker 8 Right.

Speaker 7 I've heard some people say that we should think of it less like a piece of software or a piece of hardware and more like a new intelligent species.

Speaker 11 Yes.

Speaker 8 It wouldn't be a computer program exactly. It wouldn't be a human exactly. It would be this sort of digital supermind that could do anything a human could and more.

Speaker 7 The goal, the benchmark that the AI industry is working toward right now is something that they call AGI, artificial general intelligence.

Speaker 7 The general is the key part, because a general intelligence isn't just really good at one or two or 20 or 100 things but, like a very smart person, can learn new things, can be trained in how to do almost anything.

Speaker 5 I guess this is where people get worried about jobs getting replaced, because suddenly you have a worker, like a lawyer or a secretary, and you can tell the AI to learn everything about that job.

Speaker 7 Exactly. I mean, that is what they're making. And that's why there's a lot of concern about what this could do to the economy.

Speaker 7 I mean, a true AGI could learn how to do any human job: factory worker, CEO, doctor. And as ambitious as that sounds, it has been the stated, on-paper goal of the AI industry for a very long time.

Speaker 7 But when I was talking to Kevin Roose, he was saying that even just a decade ago, the idea that we would actually see it within our lifetimes was seen, even in Silicon Valley, as a pie-in-the-sky dream.

Speaker 8 People would get laughed at inside the biggest technology companies for even talking about AGI. It seemed like planning, you know, to build a hotel chain on Mars or something.

Speaker 8 It was like that far off in people's imagination.

Speaker 8 And now, if you say you don't think AGI is going to arrive until 2040, you are seen as like a hyper-conservative, basically Luddite in Silicon Valley.

Speaker 7 Well, I know that you are regularly talking to people at OpenAI and Anthropic and DeepMind and all these companies. What is their timeline at this point?

Speaker 7 When do they think they might hit this benchmark of AGI?

Speaker 8 I think the overwhelming majority view among the people who are closest to this technology, both on the record and off the record, is that it would be surprising to them if it took more than about three years for AI systems to become better than humans at almost all cognitive tasks, at least.

Speaker 8 Some people say physical tasks, robotics, that's going to take longer.

Speaker 8 But the majority view of the people that I talk to is that something like AGI will arrive in the next two or three years, or certainly within the next five.

Speaker 5 I mean,

Speaker 6 holy shit.

Speaker 7 Holy shit.

Speaker 5 That is really soon.

Speaker 7 This is why there has been such insane amounts of money invested in artificial intelligence in recent years. This is why the AI race has been heating up.

Speaker 5 Right.

Speaker 4 This is to accelerate the path to AI.

Speaker 7 But this has also really brought more attention to this other group of people in technology, people who I personally have been following for over a decade at this point, who have dedicated themselves to trying everything they can to stop these accelerationists.

Speaker 19 The basic description I would give to the current scenario is, if anyone builds it, everyone dies.

Speaker 7 Many of these people, like Eliezer Yudkowsky, are former accelerationists who used to be thrilled about the AI revolution and who for years now have been trying to warn the world about what's coming.

Speaker 19 I am worried about the AI that is smarter than us. I'm worried about the AI that builds the AI, that is smarter than us and kills everyone.

Speaker 7 There's also the philosopher Nick Bostrom. He published a book back in 2014 called Superintelligence.

Speaker 20 Now, a superintelligence would be extremely powerful. We would then have a future that would be shaped by the preferences of this AI.

Speaker 7 Not long after, Elon Musk started going around sounding this alarm.

Speaker 12 I have exposure to the most cutting-edge AI, and I think people should be really concerned about it.

Speaker 7 He went to MIT.

Speaker 12 I mean, with artificial intelligence, we are summoning the demon.

Speaker 7 Told them that creating an AI would be summoning a demon.

Speaker 12 AI is a fundamental risk to the existence of human civilization.

Speaker 7 Musk went as far as to have a personal meeting with President Barack Obama, trying to get him to regulate the AI industry and take the existential risk of AI seriously.

Speaker 7 But he, like most of these guys at the time, just didn't really get anywhere. However, in recent years, that has started to change.

Speaker 22 The man dubbed the godfather of artificial intelligence has left his position at Google, and now he wants to warn the world about the dangers of the very product that he was instrumental in creating.

Speaker 7 Over the past few years, there have been several high-profile AI researchers, in some cases very decorated AI researchers.

Speaker 23 This morning, as companies race to integrate artificial intelligence into our everyday lives, one man behind that technology has resigned from Google after more than a decade.

Speaker 7 Who have been quitting their high-paying jobs, going out to the press, and telling them that this thing that they helped to create poses an existential risk to all of us.

Speaker 10 It really is an existential threat. Some people say this is just science fiction. And until fairly recently, I believed it was a long way off.

Speaker 7 One of the biggest voices out there doing this has been this guy, Geoffrey Hinton.

Speaker 7 He's a really big deal in the industry, and it meant a lot for him to quit his job, especially because he's a Nobel Prize winner for his work in AI.

Speaker 21 The risk I've been warning about the most, because most people think it's just science fiction, but I want to explain to people it's not science fiction, it's very real, is the risk that we'll develop an AI that's much smarter than us and it will just take over.

Speaker 7 And it's interesting when he's talking to journalists trying to sound this alarm, they're often saying, yes, we know that AI poses a risk if it leads to fake news, or like, what if someone like Vladimir Putin gets a hold of AI?

Speaker 24 It's inevitably, if it's out there, going to fall into the hands of people who maybe don't have the same values, the same motivations.

Speaker 7 He's telling them, no, no, no, no, no, this isn't just about it falling into the wrong hands. This is a threat from the technology itself.

Speaker 25 What I'm talking about is the existential threat of this kind of digital intelligence taking over from biological intelligence. And for that threat, all of us are in the same boat. The Chinese, the Americans, the Russians, we're all in the same boat.

Speaker 25 We do not want digital intelligence to take over from biological intelligence.

Speaker 5 Okay, so what exactly is he worried about when he says it's an existential threat?

Speaker 7 Well, the simplest way to understand it is that Hinton and people like him, they think that one of the first jobs that's going to get taken after the industry hits their benchmark of AGI will be the job of AI researcher.

Speaker 7 And then the AGI will 24-7 be working on building another AI that's even more intelligent and more powerful.

Speaker 5 So you're saying AI would invent a better AI and then that AI would invent an even better AI.

Speaker 7 That is one way of saying it. Yes, exactly.

Speaker 7 The AGI now becomes the AI inventor and each AI is more intelligent than the AI before it, all the way up until you get from AGI, artificial general intelligence, to ASI, artificial super intelligence.

Speaker 26 The way I define it is this is a system that is single-handedly more intelligent, more competent at all tasks than all of humanity put together.

Speaker 7 I've now spoken to a number of different people who are trying to stop the AI industry from taking this step. People like Connor Leahy. He's both an activist and a computer scientist.

Speaker 26 So it can do anything the entire humanity working together could do. So for example, you and me are generally intelligent humans, but we couldn't build semiconductors by ourselves.

Speaker 26 But humanity put together can build a whole semiconductor supply chain. A superintelligence could do that by itself.

Speaker 5 So it's kind of like this: AGI is as smart as Einstein, or way smarter than Einstein, I guess.

Speaker 7 An Einstein that doesn't sleep, that doesn't take bathroom breaks, right?

Speaker 5 And lives forever and has a memory for everything. Exactly. And ASI is smarter than a civilization.

Speaker 7 A civilization of Einsteins. That's how the theory goes, right? Like, you have the ability now to do in hours or minutes things that take a whole country, or maybe even the whole world, a century to do.

Speaker 7 And some people believe that if we were to create and release a technology like that, there'd be no coming back.

Speaker 7 Humans would no longer be the most intelligent species on earth and we wouldn't be able to control this thing.

Speaker 26 By default, these systems will be more powerful than us, more capable of gaining resources, power, control, et cetera.

Speaker 26 And unless they have a very good reason for keeping humans around, I expect that by default, they will simply not do so. And the future will belong to the machines, not to us.

Speaker 7 And they think that we have one shot, essentially.

Speaker 5 One shot. Like one shot, meaning we don't, we can't update the app once we release it.

Speaker 7 Once this cat is out of the bag, once this genie is out of the bottle, whatever the metaphor is.

Speaker 5 Once this program is out of the lab, as it were.

Speaker 7 Exactly. Unless it is 100% aligned with what humans value, unless it is somehow placed under our control, they believe it will eventually lead to our demise.

Speaker 5 I guess I'm scared to ask this, but like, how? Would this look like a global disaster? Or are we talking about it getting control of CRISPR and releasing a global pandemic?

Speaker 7 Yes, there are those fears for sure.

Speaker 7 I want to get more into all the different scenarios that they foresee in a future episode, but I think the simplest one to grasp is just this idea that a superior intelligence is rarely, if ever, controlled by an inferior intelligence.

Speaker 7 And we don't need to imagine a future where these ASI systems hate us or they, like, break bad or something.

Speaker 7 The way that they'll often describe it is that these ASI systems, as they get further and further out from human-level intelligence, after they evolve beyond us, that they might just not think that we're very interesting.

Speaker 5 I mean, in some ways, hatred would be flattering, like if they saw us as the enemy and we were in some battle between humanity and the AI, which we've seen from so many movies.

Speaker 5 But what you're describing is just, like, indifference.

Speaker 7 Right.

Speaker 7 I mean, one of the ways that people will describe it is that like, if you're going to build a new house, of all the concerns you might have in the construction of that house, you're not going to be concerned about the ants that live on that land that you've purchased.

Speaker 7 And they think that one day the ASIs may come to see us the way that we currently see ants.

Speaker 28 You know, it's not like we hate ants.

Speaker 28 Some people really love ants. But humanity as a whole has interests. And if ants get in the way of our interests, then we'll fairly happily kind of destroy them.

Speaker 7 This is something I was talking to William MacAskill about. He is a philosopher and also a co-founder of the movement called effective altruism.

Speaker 28 And the thought here is, if you think of the AI we're developing as, like, this new species, then as that species' capabilities keep increasing, so the argument goes, it will just be more competitive than the human species. And so we should expect it to end up with all the power.

Speaker 28 That doesn't immediately lead to human extinction, but at least it means that our survival might be as contingent on the goodwill of those AIs as the survival of ants is on the goodwill of human beings.

Speaker 5 If the future is closer than we think, and if one day soon there is at least a reasonable probability that superintelligent machines will treat us like we treat bugs, then what do the folks worried about this say that we should do?

Speaker 7 Well, there are essentially two different approaches to the perceived threat.

Speaker 7 Some people who are worried about this, they simply say that we need to stop the AI industry from going any further and we need to stop them right now.

Speaker 26 We should not build ASI.

Speaker 7 Just don't do it.

Speaker 26 We're not ready for it and it shouldn't be done. Further than that, it's not just... I am not trying to convince people to not do it out of the goodness of their heart. I think it should be illegal.

Speaker 26 It should be literally illegal for people and private corporations to attempt even to build systems that could kill everybody.

Speaker 5 What would that mean to make it illegal? Like, how do you enforce that?

Speaker 7 Yeah, I mean, some accelerationists joke, like, what, are you going to outlaw algebra?

Speaker 5 Right. You don't need uranium in a secret center. You can just build it with code.

Speaker 7 Right. But you do need data centers. And you could, you know, put in laws and restrictions that stop these AI companies from building any more data centers, along with a number of other laws.

Speaker 7 There are some people, though, who go even further and say that nuclear-armed states like the U.S. should be willing to threaten to attack these data centers if AI companies like OpenAI are on the verge of releasing an AGI to the world.

Speaker 5 Wait, so even bombing data centers that are in Virginia or in Massachusetts? I mean, like...

Speaker 7 They see it as that great of a threat.

Speaker 7 They believe that on the current path we're on, there is only one outcome, and that outcome is the end of humanity.

Speaker 5 If we build it, then we die.

Speaker 7 Exactly. And this is why many people have come to call this faction the AI doomers. The accelerationists like to call them doomers.

Speaker 29 That was a... kind of pejorative coined by them and very successfully, I must say.

Speaker 26 I disavow the doomer label because I don't see myself that way.

Speaker 7 Some of them have embraced the name Doomer. Others of them dislike the name Doomer. They often will call themselves the realists, but in my reporting, everyone calls themselves the realists. So I didn't think that would work.

Speaker 26 Like, I consider it to be realistic, to be calibrated.

Speaker 7 And one of the reasons that they balk at the name is that they feel like it makes them come off as a bunch of anti-technology Luddites, when in fact, many of them work in technology, many of them love technology.

Speaker 7 People like Connor Leahy, I mean, they even like AI as it is right now. I mean, he uses ChatGPT.

Speaker 7 He just tells me that from everything that he sees, where it's headed, where it's going, we have no choice but to stop them.

Speaker 26 If it turns out tomorrow, there's new evidence that actually all of these problems I'm worried about are less of a problem than I think they are, I would be the most happy person in the world.

Speaker 7 Like this would be ideal.

Speaker 5 All right. So one approach is we stop AI in its tracks. It's illegal to proceed down this road we're on.

Speaker 5 But that seems challenging to do, given how much is already invested in AI and, frankly, how much potential value there is in the progress of this technology. So what's the alternative?

Speaker 7 Well, there's another group of people who are pretty much equally worried about the potentially catastrophic effects of making an AGI and it leading to an ASI.

Speaker 7 But they agree with you that we probably can't stop it. And some of them would go as far as to say we probably shouldn't stop it, because there really are a lot of potential benefits in AGI.

Speaker 7 So what they're advocating for is that our entire society, essentially our entire civilization, needs to get together and try in every way possible to get prepared for what's coming.

Speaker 29 How do we find the win-win outcome here?

Speaker 7 One of the advocates for this approach that I talked to is Liv Boeree. She is a professional poker player and also a game theorist.

Speaker 29 Our job now, right now, whether you're someone building it or someone who is observing people build it or just a person living on this planet because this affects you too, is to collectively figure out how we unlock this narrow path because it is a narrow path we need to navigate.

Speaker 28 We should be really focusing a lot right now on trying to understand as concretely as possible what are all the obstacles we need to face along the way and what can we be doing now to ensure that that transition goes well.

Speaker 7 This faction, which includes figures like William MacAskill, wants to see the thinking institutions of the world, you know, the universities, research labs, the media, join together to try and solve all of the issues that we're going to face over the next few years as AGI approaches.

Speaker 5 So you mean not just leave this up to the tech companies?

Speaker 7 Exactly. They want to see, you know, politicians brainstorming ways to help their constituents in the event that the bottom falls out of the job market, right?

Speaker 5 Right. Or prepare communities to have no jobs, I guess.

Speaker 7 Some of them go that far, right? Like universal basic income. And they also want to see governments around the world, especially in the U.S., start to regulate this industry.

Speaker 7 What are the concrete steps we could take in the next year to get ready?

Speaker 27 So we'd like regulations that say when a big company produces a new, very powerful thing, they run tests on it and they tell us what the tests were.

Speaker 7 Geoffrey Hinton, after he quit Google, converted to this approach, and he was talking to me about the kinds of regulations that he wants to see.

Speaker 27 And we'd like things like whistleblower protection.

Speaker 27 So if someone in one of these big companies discovers the company is about to release something awful which hasn't been tested properly, they get whistleblower protections.

Speaker 27 Those are to deal, though, with more short-term threats.

Speaker 7 Okay, but what about the long-term threats? What about this idea that AI poses this existential threat? What is it that we could do to prevent that?

Speaker 27 Okay, so I can tell you what we should do about AI itself taking over. There's one good piece of news about this, which is that no government wants that.

Speaker 27 So governments will be able to collaborate on how to deal with that.

Speaker 7 So you're saying that China doesn't want AI to take over their power and authority. The US doesn't want some technology to take over their power and authority.

Speaker 7 And so you see a world where the two of them can work together to make sure that we keep it under control.

Speaker 30 Yes.

Speaker 27 In fact, China doesn't want an AGI to take over the US government because they know it will pretty soon spread to China.

Speaker 27 So we could have a system where there were research institutes in different countries that were focused on how are we going to make it so that it doesn't want to take over from people.

Speaker 27 It will be able to if it wants to, so we have to make it not want to.

Speaker 27 And the techniques you need for making it not want to take over are different from the techniques you need for making it more intelligent.

Speaker 27 So even though the countries won't share how to make it more intelligent, they will want to share research on how do you make it not want to take over.

Speaker 7 And over time, I've come to call the people who are a part of this approach the Scouts.

Speaker 5 Like the Boy Scouts.

Speaker 7 Be prepared. Like the Boy Scouts. Yes, exactly. And it turned out that, after I ran this name by William MacAskill...

So what if I called your camp the Scouts?

Speaker 28 So a little fun fact about myself is I was a Boy Scout for 15 years.

Speaker 7 He actually was a Boy Scout. And so I thought, okay, the Scouts.

Speaker 28 Maybe that's why I've got this approach.

Speaker 7 But the key thing about the Scouts' approach, if it's going to work, is that they believe we cannot wait, that we have to start getting prepared, and we have to start right now. This is something that I was talking about with Sam Harris.

Speaker 3 The reasons to be excited and to want to go, go, go are all too obvious, except for the fact that we're running all of these other risks and we haven't figured out how to mitigate them.

Speaker 7 Sam is a philosopher.

Speaker 7 He's an author. He hosts the podcast Making Sense and he's probably the most impassioned scout that I know personally.

Speaker 3 There's every reason to think that we have something like a tightrope walk to perform successfully now, like in this generation, right? Not 100 years from now.

Speaker 3 And we're edging out onto the tightrope in a style of movement that is not careful.

Speaker 3 If you knew you had to walk a tightrope and you got one chance to do it, and you've never done this before, like what is the attitude of that first step and that second step?

Speaker 11 Right.

Speaker 3 We're like racing out there in the most chaotic way.

Speaker 3 Yeah. And just like, we're off balance already. We're looking over our shoulder, fighting with the last asshole we met online. And we're leaping out there.

Speaker 7 Right. And you've been on this for a long time. In 2016, I remember, you did this big TED talk.

Speaker 3 Yeah.

Speaker 7 I watched it at the time. It had millions of views.

Speaker 7 And you were essentially saying the same thing. You were trying to get people to realize that we have a tightrope to walk and we have to walk it right now.

Speaker 3 Well, I wanted to help sound the alarm about the inevitability of this collision, whatever the timeframe. We know we're very bad predictors as to how quickly certain breakthroughs can happen.

Speaker 3 So Stuart Russell's point, which I also cite in that talk, which I think is a quite brilliant changing of frame, he says, okay, let's just admit it is probably 50 years out, right?

Speaker 3 Let's just change the concepts here.

Speaker 3 Imagine we received a communication from elsewhere in the galaxy, from an alien civilization that was obviously much more advanced than we are, because they're talking to us now.

Speaker 3 And the communication reads thus: "People of Earth, we will arrive on your lowly planet in 50 years. Get ready."

Speaker 3 Just think of how galvanizing that moment would be.

Speaker 3 That is what we're building: that collision and that new relationship.

Speaker 4 Coming up on The Last Invention.

Speaker 16 Why is all the worry about the technology going badly wrong? And why are people not worried enough about it not happening?

Speaker 4 The accelerationists respond to these concerns.

Speaker 30 Existential risk for humanity is a portfolio.

Speaker 30 We have nuclear war, we have pandemic, we have asteroids, we have climate change, we have a whole stack of things that could actually in fact have this existential risk.

Speaker 7 So you're saying that it's going to decrease our overall existential risk, even as it itself may pose to some degree an existential risk?

Speaker 1 Yes.

Speaker 4 Researchers tell us what they saw that changed their minds.

Speaker 31 I was a person selling AI as a great thing for decades. I convinced my own government to invest hundreds of millions of dollars in AI.

Speaker 31 All my self-worth was on the plan that it would be positive for society.

Speaker 31 And I was wrong.

Speaker 4 And we go back to where the technology fueling this debate began.

Speaker 8 Basically, this is the holy grail of the last 75 years of computer science.

Speaker 26 It is the genesis, the ur-, like, philosopher's stone of the field of computer science.

Speaker 4 The Last Invention is produced by Longview. To hear episode two right now, search for The Last Invention wherever you get your podcasts and subscribe to hear the rest of the series.

Speaker 4 Thank you for listening and our thanks to Sam.

Speaker 6 We'll see you soon.