'The Interview': The Culture Wars Came for Wikipedia. Jimmy Wales Is Staying the Course.

Transcript

Speaker 2 From The New York Times, this is The Interview. I'm Lulu Garcia-Navarro.

Speaker 2 As one of the most popular websites in the world, Wikipedia helps define our common understanding of just about everything.

Speaker 2 But recently, the site has gone from public utility to a favorite target of Elon Musk, congressional Republicans, and MAGA influencers, who all claim that Wikipedia is biased.

Speaker 2 In many ways, those debates over Wikipedia are a microcosm of bigger discussions we're having right now about consensus, civil disagreement, shared reality, truth, facts, all those little, easy topics.

Speaker 2 A bit of history. Wikipedia was founded back in the Paleolithic era of the internet in 2001 by Larry Sanger and Jimmy Wales.

Speaker 2 It was always operated as a non-profit, and it employs a decentralized system of editing by volunteers, most of whom do so anonymously.

Speaker 2 There are rules over how people should engage on the site, cordially, and how changes are made, transparently.

Speaker 2 And it's led to a culture of civil disagreement that has made Wikipedia what some have called the last best place on the internet.

Speaker 2 Now, with that culture under threat, Jimmy Wales has written a book called The Seven Rules of Trust, trying to take the lessons of Wikipedia's success and apply them to our increasingly partisan, trust-depleted world.

Speaker 2 And I have to say, I did come in skeptical of his prescriptions, but I left hoping he's right. Here's my conversation with Wikipedia co-founder, Jimmy Wales.

Speaker 2 I wanted to talk to you because I think this is a very tenuous moment for trust, and your new book is all about that.

Speaker 2 In it, you sort of lay out what you call the seven rules of trust based on your work at Wikipedia. And we'll talk about all those, as well as some of the threats and challenges to Wikipedia.

Speaker 2 But big picture, how would you describe our current trust deficit?

Speaker 3 I think I draw a distinction between what's going on maybe with politics and journalism, the culture wars and all of that, and day-to-day life. Because I think in day-to-day life, people still do trust each other.

Speaker 3 People generally think most people are basically nice.

Speaker 3 And we're all human beings bumping along on the planet, trying to do our best. And obviously, there are definitely people who aren't trustworthy.

Speaker 3 But the crisis we see in politics, trust in politicians, trust in journalism, trust in business, that is coming from other places and is something that we can fix.

Speaker 2 One of the reasons why you can be an authority on this is because you created something that scores very high on trust. You have built something that people sort of want to engage with.

Speaker 3 Yeah, I mean, I do think Wikipedia isn't as good as I want it to be. And so I think that's part of why people do have a certain amount of trust for us, because we try to be really transparent.

Speaker 3 You know, you see the notice at the top of the page sometimes that says the neutrality of this page has been disputed, or the following section doesn't cite any sources. People like that.

Speaker 3 Not many places these days will tell you, hey, we're not so sure here. And it shows that the public does have a real desire for unbiased, sort of neutral information.

Speaker 3 They want to trust. They want the sources.

Speaker 3 They want you to prove what you're saying and so forth.

Speaker 2 How does Wikipedia define a fact?

Speaker 3 Basically, we're very old-fashioned about this sort of thing. What we look for is good quality sources.

Speaker 3 So we like peer-reviewed scientific research, for example, as opposed to populist tabloid reports. You know, we look for quality magazines, newspapers, etc.

Speaker 3 So we don't typically treat a random tweet as a fact.

Speaker 3 And so we're pretty boring in that regard.

Speaker 2 Yeah, it's sort of like the publication that you cite gets cited by other reputable sources, that it issues corrections when it gets things wrong.

Speaker 3 It's all the old-fashioned sort of good stuff.

Speaker 3 And I think it's important to say when we look at different sources, they will often come to things from a different perspective or a different political point of view.

Speaker 3 That doesn't diminish the quality of the source. So, for example, I live here in London in the UK, and we have the Telegraph, which is a generally right-leaning but quality newspaper.

Speaker 3 We have The Guardian, generally left-leaning but quality newspaper. Hopefully, the facts, as you read the articles and you glean through it, the facts should be reliable and solid.

Speaker 3 But you have to be very careful as an editor to tease out, okay, what are the facts that are agreed upon here? And what are the things that are opinions on those facts?

Speaker 3 And that's, you know, that's an editorial job. It's never perfect and it's never easy.

Speaker 2 And Wikipedia also is famously open source. It's decentralized, and essentially, it's run by thousands of volunteer editors. You don't run Wikipedia, we should say.

Speaker 3 It runs me.

Speaker 2 How do those editors fix disputes when they don't agree on what facts to be included or on how something is written? How do you negotiate those differences?

Speaker 3 Well, in the best cases, what happens, and what should happen always, is this. Take a controversial issue like abortion. Obviously, if you think about a kind and thoughtful Catholic priest and a kind and thoughtful Planned Parenthood activist, they're never going to agree about abortion, but probably they can come together, because I said they're kind and thoughtful, and say, okay, but we can report on the dispute.

Speaker 3 So rather than trying to say, abortion is a sin or abortion is a human right, you could say, the Catholic Church's position is this, and the critics have responded thusly. You'll start to hear a little of the Wikipedia style, because I believe that that's what a reader really wants.

Speaker 3 They don't want to come and get one side of the story. They want to come and say, okay, wait, hold on. I actually want to understand what people are arguing about. I want to understand both sides.

Speaker 3 What are the best arguments here?

Speaker 2 Yeah, and basically, every page has what's called a talk tab where you can see the history of the discussions and the disputes, which relates to another principle of the site, which is transparency.

Speaker 2 You can look at everything and see who did what and what their reasoning was.

Speaker 3 Yeah, exactly. So, you know, oftentimes if you see something in Wikipedia and you think, huh, okay, well, that's, why does it say that?

Speaker 3 Often you'll be able to go on the talk page and read sort of what the debate was and how it was. And you can weigh in there and you can join and say, oh, actually, I still think you've got it wrong.

Speaker 3 Here's some more sources. Here's some more information. Maybe propose a compromise, that sort of thing. And in my experience, it turns out that a lot of pretty ideological people on either side are actually more comfortable doing that because they feel confident in their beliefs.

Speaker 3 I think it's the people who, and you'll find lots of them on Twitter, for example, they're not that confident in their own values and their own belief system.

Speaker 3 And they feel fear or panic or anger if someone's disagreeing with them rather than saying, huh, okay, look, that's different from what I think.

Speaker 3 Let me explain my position, which is where your more intellectually grounded person will come from.

Speaker 2 What you're saying is supported actually by a study about Wikipedia that came out in the science journal Nature in 2019. It's called The Wisdom of Polarized Crowds.

Speaker 2 Perhaps counterintuitively, it says that politically contentious Wikipedia pages end up being of higher quality, meaning that they're more evidence-based, they have more consensus around them.

Speaker 2 But I do want to ask about the times when consensus building isn't necessarily easy as it relates to specific topics on Wikipedia. Some pages, they have actually restricted editing privileges.

Speaker 2 So the Arab-Israeli conflict, climate change, abortion, unsurprising topics there. Why are those restricted and why doesn't the wisdom of polarized crowds work for those subjects?

Speaker 3 Well, typically, with the subjects that are restricted, we try to keep that as short as we can.

Speaker 3 The most common type of case is if something's really big in the news or if some big online influencer says, ah, Wikipedia is wrong, go and do something about it. And we get a rush of people who don't understand our culture, don't understand the rules, and they're just vandalizing or they're just sort of being rude and so on and so forth.

Speaker 3 And we just, as a way of calming things down, say, okay, hold on, just slow down. We're going to protect the page.

Speaker 3 The most common type of protection we call semi-protection, which just means you have to have had an account for, I forget the exact numbers, something like four days, and have made 10 edits without getting banned.

Speaker 3 Now, typically, and this is what's surprising to a lot of people about Wikipedia, on 99% of the pages, maybe more than 99%, you can edit without even logging in, and it goes live instantly. That's mind-boggling, but it kind of points to the fact that most people are basically nice. Most people are trustworthy. People don't just come by and vandalize Wikipedia. And often, if they do, it's because they're just experimenting or they didn't believe it would go live. They're like, oh my God, it actually went live, and I didn't know I was going to do that. It's like, yeah, please don't do that again.

Speaker 2 This brings me to some of the challenges.

Speaker 2 Wikipedia, while it has created this very trustworthy system, it is under attack from a lot of different places. And one of Wikipedia's sort of superpowers can also be seen as a vulnerability, right?

Speaker 2 The fact that it is created by human editors. And human editors can be threatened, even though they're supposed to be anonymous.

Speaker 2 You've had editors doxed, pressured by governments to doctor information. Some have had to flee their home countries.

Speaker 2 I'm thinking of what's happened in Russia and India, where those governments have really taken aim at Wikipedia. Would you say this is an expanding problem?

Speaker 3 Yeah, I would. I think that we are seeing all around the world a rise of authoritarian impulses towards censorship, towards controlling information.

Speaker 3 And very often, these come, you know, as a wolf in sheep's clothing, because it's all about protecting the children or whatever it might be, and you move forward in these kinds of controlling ways. But at the same time, you know, the Wikipedians are very resilient and they're very brave. And one of the things that we believe is that in many, many cases, what's happened is a real lack of understanding by politicians and leaders of how Wikipedia works.

Speaker 3 A lot of people really have a very odd assumption that it's somehow controlled by the Wikimedia Foundation, which is the charity that I set up that owns and operates the website.

Speaker 3 Therefore, they think it's possible to pressure us in ways that it's actually not possible to pressure us. The community has real intellectual independence. But yeah, I do worry about it.

Speaker 3 I mean, something that always weighs very heavily on us is volunteers who are in dangerous circumstances. How they remain safe is critically important.

Speaker 2 I want to bring up something that just happened here in the U.S.

Speaker 2 In August, James Comer and Nancy Mace, two Republican representatives from the House Oversight Committee, wrote a letter to Wikimedia requesting records, communication, analysis on specific editors, and also any reviews on bias regarding the state of Israel in particular.

Speaker 2 Their reason, and I'm going to quote here, is because they are investigating "the efforts of foreign operations and individuals at academic institutions subsidized by U.S. taxpayer dollars to influence U.S. public opinion." So can you tell me your reaction to that query?

Speaker 3 Yeah.

Speaker 3 I mean, you know, we've given a response to the parts of that that were reasonable. I mean, what we feel like is there's a deep misunderstanding or lack of understanding about how Wikipedia works.

Speaker 3 You know, ultimately, the idea that something being biased is a proper and fit subject for a congressional investigation is frankly absurd.

Speaker 3 And so, you know, in terms of asking questions about cloak and dagger, whatever, we're not going to have anything useful to tell them.

Speaker 3 I'm like, I know the Wikipedians, they're like a bunch of nice geeks.

Speaker 2 Yeah, I mean, the Heritage Foundation here in the United States, which was the architect of Project 2025, has said that it wants to dox your editors. I mean, how do you protect people from that?

Speaker 3 I mean, it's embarrassing for the Heritage Foundation. I remember when they were intellectually respectable, and that's a shame. If that's what they think is the right way forward, they're just badly mistaken.

Speaker 2 But it does seem that there is this movement on the right to target Wikipedia over these types of concerns. And I'm wondering why you think that's happening.

Speaker 3 I mean, it's hard to say. There's a lot of different motivations, a lot of different people. Some of it would be, you know, genuine concern if they see that maybe Wikipedia is biased.

Speaker 3 Or, you know, I have seen, for example, Elon Musk has said Wikipedia is biased because they have these really strong rules about only citing mainstream media, and the mainstream media is biased. Okay.

Speaker 3 I mean, that's an interesting question, an interesting criticism, certainly I think worthy of some reflection by everyone, the media and so on and so forth. But it's hardly, you know, it's hardly news to anybody and not actually that interesting.

Speaker 3 Then other people, you know, in various places around the world, not speaking just of the U.S., but, you know, facts are threatening. And if you and your policies are at odds with the facts, then you may find it very uncomfortable for people to simply explain the facts.

Speaker 3 And I don't know, that's always going to be a difficulty. But we're not about to say, gee, you know, maybe science isn't valid after all. Maybe the COVID vaccine killed half the population.

Speaker 3 No, it didn't. Like, that's crazy, and we're not going to print that. And so they're going to have to get over it.

Speaker 2 I want to talk about a recent example of a controversy surrounding Wikipedia, and that's the assassination of Charlie Kirk.

Speaker 2 You know, Senator Mike Lee called Wikipedia wicked because of the way it had described Kirk on its page as a far-right conspiracy theorist, among other complaints that they had about the page.

Speaker 2 And I went to look, and at the time that we're speaking, that description is now gone from Wikipedia. Those on the left would say that that description was accurate.

Speaker 2 Those on the right would say that that description was biased all along. How do you see that, that tension?

Speaker 3 Well, I mean, I think the correct answer is you have to address all of that. You have to say, look, this was a person. I think the least controversial thing you could say about Charlie Kirk is that he was controversial.

Speaker 3 I don't think anybody would dispute that. And to say, like, okay, this was a figure who was a great hero to many people and treated as a demon by others.

Speaker 3 He had these views, many of which are out of step with, say, mainstream scientific thinking, many of which are very much in step with religious thinking and so on and so forth.

Speaker 3 And those are the kinds of things that if we do our job well, which I think we have in this case, we're going to describe all of that. Like, maybe you don't know anything about Charlie Kirk.

Speaker 3 You just heard, oh my God, this man was assassinated. Who was he? What's this all about? Well, you should come and learn all that.

Speaker 3 You should learn like who his supporters were and why they supported him. And what are the arguments he put forward? And what are the things he said that upset people?

Speaker 3 That's just part of learning what the world is about.

Speaker 2 So those words that were there, far-right and conspiracy theorist, those were, in your view, the wrong words, and the critics of Wikipedia had a point?

Speaker 3 Well, I don't... it depends on the specific criticism.

Speaker 3 So if the criticism is that this word appeared on this page for 17 minutes, I'm like, you know what, you've got to understand how Wikipedia works. It's a process, it's a discourse, it's a dialogue.

Speaker 3 But to the extent that he was called a conspiracy theorist by prominent people, that's part of his history. That's part of what's there.

Speaker 3 And Wikipedia shouldn't necessarily call him that, but we should definitely document all of that.

Speaker 2 You mentioned Elon Musk, who's come after Wikipedia. He calls it Wokeopedia.

Speaker 2 He's now trying to start his own version of Wikipedia called Grokipedia, and he says it's going to strip out ideological bias.

Speaker 2 I wonder what you think attacks like his do for people's trust in your platform writ large.

Speaker 2 Because as we've seen in the journalism space, if enough outside actors are telling people not to trust something, they won't.

Speaker 3 Well, you know, it's very hard to say.

Speaker 3 I mean, I think for many people, their level of trust in Elon Musk is extremely low because he says wild things all the time. So to that extent, you know, when he attacks us, people donate more money. So, you know, that's not my favorite way of raising money. But the truth is, a lot of people are responding very negatively to that behavior.

Speaker 3 One of the things I do say in the book, and I've said to Elon Musk, is that type of attack is counterproductive, even if you agree with Elon Musk, because to the extent that he has convinced people falsely that Wikipedia has been taken over by woke activists, then two things happen.

Speaker 3 Your kind and thoughtful conservatives, who we very much welcome, and we want more people who are thoughtful and intellectual and maybe disagree about various aspects of the spirit of our times to come and join us and let's make Wikipedia better.

Speaker 3 But if those people think, oh, no, it's just going to be a bunch of crazy woke activists, they're going to go away.

Speaker 3 And then on the other side, the crazy woke activists are going to be like, great, I found my home. I don't have to worry about whatever.

Speaker 3 I can come and write rants against the things I hate in the world. We don't really want them either.

Speaker 2 You said you talked to Elon Musk about this. When did you talk to him about it? And what was that conversation like?

Speaker 3 I mean, we've had various conversations over the years.

Speaker 3 You know, he texts me sometimes. I text him sometimes.

Speaker 3 He's much more respectful and quiet in private, but that you would expect. He's got a big public persona.

Speaker 2 When was the last time you had that exchange?

Speaker 3 That's a good question.

Speaker 3 I don't know. I think the morning after the last election, he texted me that morning. I congratulated him.

Speaker 2 Obviously, the debate that happened more recently was because of the hand gesture that he made that was interpreted in different ways.

Speaker 2 And he was upset in the way that it had been characterized on Wikipedia.

Speaker 3 Yeah, I heard from him after that. I mean, in that case, I pushed back because I went to check, like, oh, what does Wikipedia say? And it was very matter-of-fact. It said he made this gesture.

Speaker 3 It got a lot of news coverage. Many interpreted it as this, and he denied that it was a Nazi salute. That's the whole story. It's part of history.

Speaker 3 I don't see how you could be upset about it being presented in that way. If Wikipedia said, you know, Elon Musk is a Nazi, that would be really, really wrong.

Speaker 3 But to say, look, he did this gesture and it created a lot of attention and some people said it looked like a Nazi, da-da-da-da-da, yeah, that's great. That's what Wikipedia is.

Speaker 3 That's what it should do.

Speaker 2 Do you think Elon Musk is acting in good faith? You're saying that in private he's nice and cordial, but his public persona is very different.

Speaker 3 You know, I think it's a fool's errand to try and figure out what's going on in Elon Musk's mind, so I'm not going to try.

Speaker 2 I don't mean to press you on this. I'm just trying to refer to something that you said, which is people, human to human, are nice, right? That we're good, that we should assume good faith.

Speaker 2 And so you're saying that Elon one-on-one is lovely, but he is attacking your institution and potentially draining support for Wikipedia.

Speaker 3 Well, I mean, I don't think he has the power he thinks he has, or that a lot of people think he has, to damage Wikipedia. I mean, we'll be here in 100 years and he won't.

Speaker 3 So I think as long as we stay Wikipedia, people will still love us. They will say, you know what, Wikipedia is great.

Speaker 3 And all the noise in the world and all these people ranting, that's not really the real thing.

Speaker 3 The real thing is genuine human knowledge, genuine discourse, genuinely grappling with the difficult issues of our day. That's actually super valuable. So there's a lot of noise in the world.

Speaker 3 I hope Elon will take another look and change his mind. That'd be great, but I would say that of anybody.

Speaker 3 And, you know, in the meantime, I don't think we need to obsess over it or worry that much about it.

Speaker 3 You know, we don't depend on him for funding. And yeah, there we are.

Speaker 2 I hear you saying that part of your strategy here is just to stay the course, do what Wikipedia does.

Speaker 2 Are there changes that you do think Wikipedia needs to make to stay accurate and relevant?

Speaker 3 Well, I think what we have to focus on when we think about the long run, we also have to keep up with technology.

Speaker 3 You know, we've got this rise of the large language models, which are an amazing but deeply flawed technology. And so the way I think about that is to say, okay, look, I know for a fact, like, no AI today is competent to write a Wikipedia entry. It can do a passable job on a very big, famous topic, but on anything slightly obscure, the hallucination problem is disastrous.

Speaker 3 But at the same time, I'm very interested in how we can use this technology to support our community. One idea is, you know, take a short entry and feed in the sources. Maybe it's only got five sources and it's short, and just ask the AI, is there anything in the entry that's not supported by the sources?

Speaker 3 Or is there anything in the sources that could be in Wikipedia but isn't? And give me a couple suggestions if you can find anything. As I've played with that, it's pretty okay.

Speaker 3 It needs work and it's not perfect. But if we react with just like, oh my God, we hate AI, then we'll miss the opportunity to do that.

Speaker 3 And if we go crazy, like, oh, we love AI, and we start using it for everything, we're going to lose trust, because we're going to include a lot of AI-hallucinated errors and so on.

Speaker 2 I mean, that's interesting because Wikimedia writes this yearly global trends report on what might impact Wikipedia's work.

Speaker 2 And for 2025, it wrote, quote, "We are seeing that low-quality AI content is being churned out not just to spread false information, but as a get-rich-quick scheme, and it is overwhelming the internet. High-quality information that is reliably human-produced has become a dwindling and precious commodity."

Speaker 2 I read that crawlers from large language models have basically crashed your servers because they use so much of Wikipedia's content.

Speaker 2 And it did make me wonder, will people be using these large language models to answer their questions and not going to the source, which is you?

Speaker 3 Well, you know, this has been a question since they began. We haven't seen any real evidence of that. And I use AI personally quite a lot, but I use it in a different way, for different use cases. So, see, I like to cook. It's my hobby. I fancy myself as being quite a good cook.

Speaker 3 And I will often ask ChatGPT for a recipe. I also ask it for links to websites with recipes. It sometimes makes them up, so that's a bit hilarious.

Speaker 3 And I'd also suggest being careful using ChatGPT for cooking unless you actually already know how to cook, because when it's wrong, it's really wrong. But Wikipedia would be useless for that. Wikipedia doesn't have recipes. It's a completely different realm of knowledge than encyclopedic knowledge. So yeah, I'm not that worried about it.

Speaker 3 I do worry, you know, about this time, when journalism has been under incredible financial pressure and there's a new competitor for journalism, which is sort of low-quality, churned-out content produced for search engine optimization to compete with real human-written content.

Speaker 3 To the extent that that further undermines the business model of particularly local journalism, which is something that I'm very worried about, then that's a big problem.

Speaker 3 And it's not directly about Wikipedia, but it is about, you know, the fact that it's very, very cheap to generate very plausible text that, yeah, hmm, that doesn't seem good to me.

Speaker 2 It definitely doesn't seem good to me either, as a journalist. I just recall that there was this hope that as the internet got flooded with garbage, and this is even before AI, this was just, you know, kind of troll farms and clickbait, it would benefit trustworthy sources of information.

Speaker 2 And instead, we've seen that the opposite has happened. Wikipedia, news organizations, academic institutions, they're all struggling with the same thing.

Speaker 2 Why do you think that they are struggling in an era where they should be flourishing, if what you say is true, that people ultimately do want to trust the information that they're getting?

Speaker 3 Well, I mean, I think that a big piece of it is that the news media has not done a very good job of sticking to the facts and avoiding bias.

Speaker 3 I think a lot of news media, not all of it, but a lot of news media has become more partisan. And there are reasons for it and it's short-termism.

Speaker 3 And you'll even see there's some stuff in the book about this, some arguments by some people in journalism that objectivity is... that we should give up on it and be partisan and so on and so forth. I think that's a huge mistake.

Speaker 3 And I think there's lots of evidence for that.

Speaker 3 You know, Wikipedia is incredibly popular and that's one of the things people say about Wikipedia that they really value and they're really disappointed if they feel like we're biased and so on and so forth.

Speaker 3 So, I mean, I gave the example earlier because I live in the UK, I read these papers, but you know, if you look at the Telegraph and you look at The Guardian on an issue related to climate change, I can already tell you before we start reading which attitude is going to come from which paper.

Speaker 3 Neither of them is doing a very good job of saying, actually, it's not our job to be on one side of that issue or the other.

Speaker 3 It's our job to describe the facts and to understand the other side and so on and so forth.

Speaker 3 Returning to that value system is hugely important because otherwise, how are you going to get the trust of the public?

Speaker 2 I guess I'm surprised at you saying this because Wikipedia has been faced with similar attacks on its own credibility.

Speaker 2 And you say that you are neutral and credible and that the system that you employ is fair. And yet there are people who completely dispute that.

Speaker 2 And so I think, in response to what is a very common broadside against journalists and journalism in this era, that they have taken a side, those of us on the inside would say it is part of a larger project of discrediting facts.

Speaker 2 And we've seen those attacks on Wikipedia, we've seen them on academic institutions, and we've seen them on the media. They are all part of the same thing.

Speaker 2 So I'd love you to tease out why it's unfair when it happens to Wikipedia, but it's fair when it happens to journalistic institutions.

Speaker 3 Well, it's either fair or unfair for both, depending on what the actual situation is.

Speaker 3 So, you know, there have been cases in the history of Wikipedia when somebody said to me, wow, Wikipedia is really biased on this topic.

Speaker 3 And I say the response should be, wow, let's check it out. Let's see.

Speaker 3 Let's try and do better. If we find that we have been biased in some area, then we need to do a better job. And I think that for many outlets, that isn't happening.

Speaker 3 Instead, what's happening is pandering to an existing audience. And I understand the reason why that's happened. It has to do with the business model, has to do with getting clicks online, and so on and so forth.

Speaker 3 It has to do with the fact that without sufficient financial resources, you have to kind of scrap for every penny you can get in the short run rather than saying, no, we're going to take the long view, even though a few people may cancel.

Speaker 3 And, you know, I just, I'm encouraging us all to say, you know what, let's double down on that.

Speaker 3 Let's really, really take very, very seriously the need to be trustworthy.

Speaker 2 After the break, Jimmy and I speak again about how he thinks part of Wikipedia's success is the fact that profit isn't even on the table.

Speaker 3 The most successful tweet I ever had: I think it was a New York Post journalist who tweeted to Elon, "You should just buy Wikipedia," when he was complaining about something on Wikipedia, and I just wrote, "Not for sale."

Speaker 1 A great adventure always kicks off with a question: Where should we camp this weekend? Is there public land nearby? What's the safest way up?

Speaker 1 Curiosity propels us into bigger adventures, grander stories, and personal discovery.

Speaker 1 When you're ready to turn your curiosity into action, download onX to help you find a way.

Speaker 1 Learn more at onxmaps.com.

Speaker 1 This podcast is sponsored by Talkspace. You know when you're really stressed or not feeling so great about your life or about yourself, talking to someone who understands can really help.

Speaker 1 But who is that person? How do you find them? Where do you even start? Talkspace. Talkspace makes it easy to get the support you need.

Speaker 1 With Talkspace, you can go online, answer a few questions about your preferences, and be matched with a therapist.

Speaker 1 And because you'll meet your therapist online, you don't have to take time off work or arrange childcare. You'll meet on your schedule, wherever you feel most at ease.

Speaker 1 If you're depressed, stressed, struggling with a relationship, or if you want some counseling for you and your partner, or just need a little extra one-on-one support, Talkspace is here for you.

Speaker 1 Plus, Talkspace works with most major insurers, and most insured members have a $0 copay. No insurance? No problem.

Speaker 1 Now get $80 off of your first month with promo code Space80 when you go to talkspace.com. Match with a licensed therapist today at talkspace.com.

Speaker 1 Save $80 with code Space80 at talkspace.com.

Speaker 3 Hi.

Speaker 3 Hello.

Speaker 2 So I was thinking about our first conversation, and I was thinking about the moment that Wikipedia was created in, a time before social media, before sort of the dramatic polarization that we've seen, before the political weaponization of the internet that we've seen.

Speaker 2 I'm still, after talking to you, sort of not sure that the lessons of how Wikipedia was created apply to today.

Speaker 2 And so I wanted to ask you, do you think Wikipedia could be created now and exist in the same way that it does?

Speaker 3 Yeah, I do. Yeah, I think it could.

Speaker 3 And I actually think that the lessons are pretty timeless.

Speaker 3 At the same time, yeah, it's absolutely valid to acknowledge the internet is different now and there are new problems, problems that come from social media and all the rest, and the, you know, the aggressively politicized culture wars that we live in.

Speaker 3 That is different, but I don't think that's a permanent change to humanity. I think we're just going through a really crazy era, and here we are.

Speaker 2 Why do you think the internet didn't go the way of Wikipedia? You know, collegial, working for the greater good, fun, nerdy, all the words that you use to describe that moment of creation.

Speaker 3 Well, you know, the thing is, I'm old enough that I sort of grew up on the internet in the age of Usenet, which was this massive message board, kind of like Reddit today, except not controlled by anyone, because it was by design distributed and uncontrollable, unmoderatable for the most part.

Speaker 3 And it was notoriously toxic.

Speaker 3 There was some skepticism then. And that was when it was first recognized, I suppose, that anonymity can be problematic, that people behind an alias, behind their keyboards, with no accountability, can be just really bad and really vicious. And that's when we first started seeing spam.

Speaker 3 I remember some of the early spam and everybody was like, oh my God, what's this? Spam, you know, it's terrible. So I think some of these things are just human issues.

Speaker 3 But now, to a larger degree than then, we live online.

Speaker 2 It's in our pocket all the time.

Speaker 3 It's in our pocket all the time. Yeah.

Speaker 3 So obviously the impact is much greater.

Speaker 2 I mean, I think I was thinking about Wikipedia in particular and maybe why it went a different way in that you chose at a certain point to make it a not-for-profit.

Speaker 2 You chose not to sort of capitalize on the success of Wikipedia.

Speaker 2 And it made me wonder about, you know, OpenAI started as an open-source, for-the-greater-good project, kind of like Wikipedia, and they've now shifted into being a multibillion-dollar business.

Speaker 2 I'd love to know your thoughts on that shift for OpenAI, but more broadly, do you think that the money part of it also changed the equation?

Speaker 3 Yeah, I mean, I do think it made a difference in lots of ways. And I'm not against for-profit.

Speaker 3 You know, I'm not, you know, there's nothing wrong with for-profit companies. But even as a non-profit, you do have to have a business model, so to speak.

Speaker 3 You've got to figure out how you're going to pay the bills.

Speaker 3 And for Wikipedia, that's not too bad. The truth is we don't require billions and billions and billions of dollars in order to operate Wikipedia.

Speaker 3 It's, you know, we need servers and databases, and we need to support the community and all these kinds of things. I would say, in terms of the development of Wikipedia and how we're so community-first and community-driven, you wouldn't really necessarily have that if the board were made up largely of investors who were worried about profitability and things like that.

Speaker 3 Also, I think it's important today for our intellectual independence. We're under attack in various ways, as we've talked about.

Speaker 3 And, you know, what's interesting is, you know, one of the things that isn't going to happen. Actually, the most successful tweet I ever had: I think it was a New York Post journalist who tweeted to Elon, "You should just buy Wikipedia," when he was complaining about something on Wikipedia. And I just wrote, "Not for sale."

Speaker 3 That was very popular.

Speaker 3 But it isn't for sale. And, you know, I just thought, you know what? I would like to imagine myself as the person who would say to Elon, "No, thank you," to a $30 billion offer if I owned the whole thing. But would I, you know, actually, for $30 billion? You know, $30 million? Yeah, I'm not interested. $30 billion, you know...

Speaker 3 And so that's not going to happen, because we're a charity and I don't get paid and the board doesn't get paid and all of that.

Speaker 3 And I do think that's important for that independence that we're not, we don't think in those terms. We're not even interested in that.

Speaker 2 Since we last spoke, the co-founder of Wikipedia, Larry Sanger, has given an interview to Tucker Carlson that's getting a lot of attention here in the United States on the right.

Speaker 2 And he has had a lot to say about Wikipedia and not a lot of it's good. In the past, he's called it one of the most effective organs of establishment propaganda in history.

Speaker 2 And we should say that he believes Wikipedia has a liberal bias.

Speaker 2 And in this interview and on his X feed, he's advocating for what he's calling reforms to the site, which include "reveal who Wikipedia's leaders are" and "abolish source blacklists."

Speaker 2 And I just wonder what you make of it.

Speaker 3 Yeah, I haven't watched it. I can't bear Tucker Carlson, so I'm going to have to just suck it up and watch us both.

Speaker 3 So I'm not, I can't speak to the specifics in that sense, but you know, the idea that everything is an equally valid source and that it's somehow wrong that Wikipedia tries to prioritize the mainstream media and quality newspapers and magazines, and make judgments about that, is not something I can in any way apologize for. But you know, there's no question.

Speaker 3 Like, one of my sort of fundamental beliefs is that Wikipedia should always stand ready to accept criticism and change.

Speaker 3 And so, to the extent that a criticism says Wikipedia is biased in a certain way, and that these are the flaws in the system, well, we should take that seriously.

Speaker 3 We should say, okay, like, is there a way to improve Wikipedia? Is our mix of editors right?

Speaker 3 At the same time, I also think, you know what? We're going to be here in 100 years and we're designing everything for the long haul.

Speaker 3 And the only way I think we can last that long is not by pandering to this sort of raging mob of the moment, but by maintaining our values, maintaining our trustworthiness, and being serious about trying to make things better if we've got legitimate criticism.

Speaker 3 And so, you know, other than the fact that, okay, we're just going to do our thing and we're going to do it as well as we can, I don't know what else we can do.

Speaker 2 I think you and Larry did build something beautiful that has endured.

Speaker 2 I do wonder if it's going to be part of our future, because I feel some despair about where we're all headed and some fear.

Speaker 2 And I guess you'll just say that I have to trust that it's all going to end up okay.

Speaker 2 But I do worry that it might not.

Speaker 3 Yeah, I mean,

Speaker 3 there's so much right now to worry about.

Speaker 3 And, you know, I can't dismiss all that. I can try and cheer you up a little bit.

Speaker 3 But, you know, I, you know, we just saw Donald Trump talking about the enemy within and suggesting the military should be in cities doing what? Shooting people. He doesn't, I, it's unbelievable.

Speaker 3 On the other hand, I sort of think he's just blustering and being Donald Trump and all that.

Speaker 3 But you have to worry.

Speaker 2 That didn't cheer me up. I got to tell you right there, but that's

Speaker 3 fair enough.

Speaker 2 I got to tell you, like as a pep talk,

Speaker 3 pretty low, pretty low.

Speaker 3 I think you're going to be all right, but it's, yeah, it's a, it's a rough time.

Speaker 3 Okay.

Speaker 2 What was the last page you read on Wikipedia and what were you trying to find out?

Speaker 3 Oh, that's a good question. Can I take a second to look?

Speaker 2 Sure.

Speaker 3 Show full history. Search Wikipedia.

Speaker 3 Now, I'm going to skip over List of Wikipedians by number of edits. That's just me doing work.

Speaker 3 I'm going to look. Oh, I know. This is fun.

Speaker 3 Admiral Sir Hugo Pearson, who died in 1912, used to own my house in the countryside.

Speaker 3 And I found this out, and there's a picture of him, which I found on eBay and ordered. And I was trying to remember something, and there's nothing about my house in the article, because he was there and then he moved away, and, uh, but I love it. And I'm thinking of making a, replacing the AI voice assistant. I use Alexa; you know, people use OK Google or whatever. I want to make my own, and I want to have it be a character, and the character will be the ghost of Hugo Pearson.

Speaker 3 Anyway, that's what I was researching. I'm not sure I'll ever get around to it.

Speaker 3 I'm, like, really busy promoting my book and things like that.

Speaker 3 But when I get spare time, I dream of sort of being a geek, and I'm going to go home in February, maybe, and just work all month playing in my house.

Speaker 2 You are a geek in the best possible way. Thank you so much for your time.

Speaker 3 I really appreciate it. Thank you. Yeah, it's been great.

Speaker 2 That's Jimmy Wales. His new book, The Seven Rules of Trust: A Blueprint for Building Things That Last, comes out October 28th.

Speaker 2 To watch this interview and many others, you can subscribe to our YouTube channel at youtube.com/@theinterviewpodcast. This conversation was produced by Wyatt Orme.

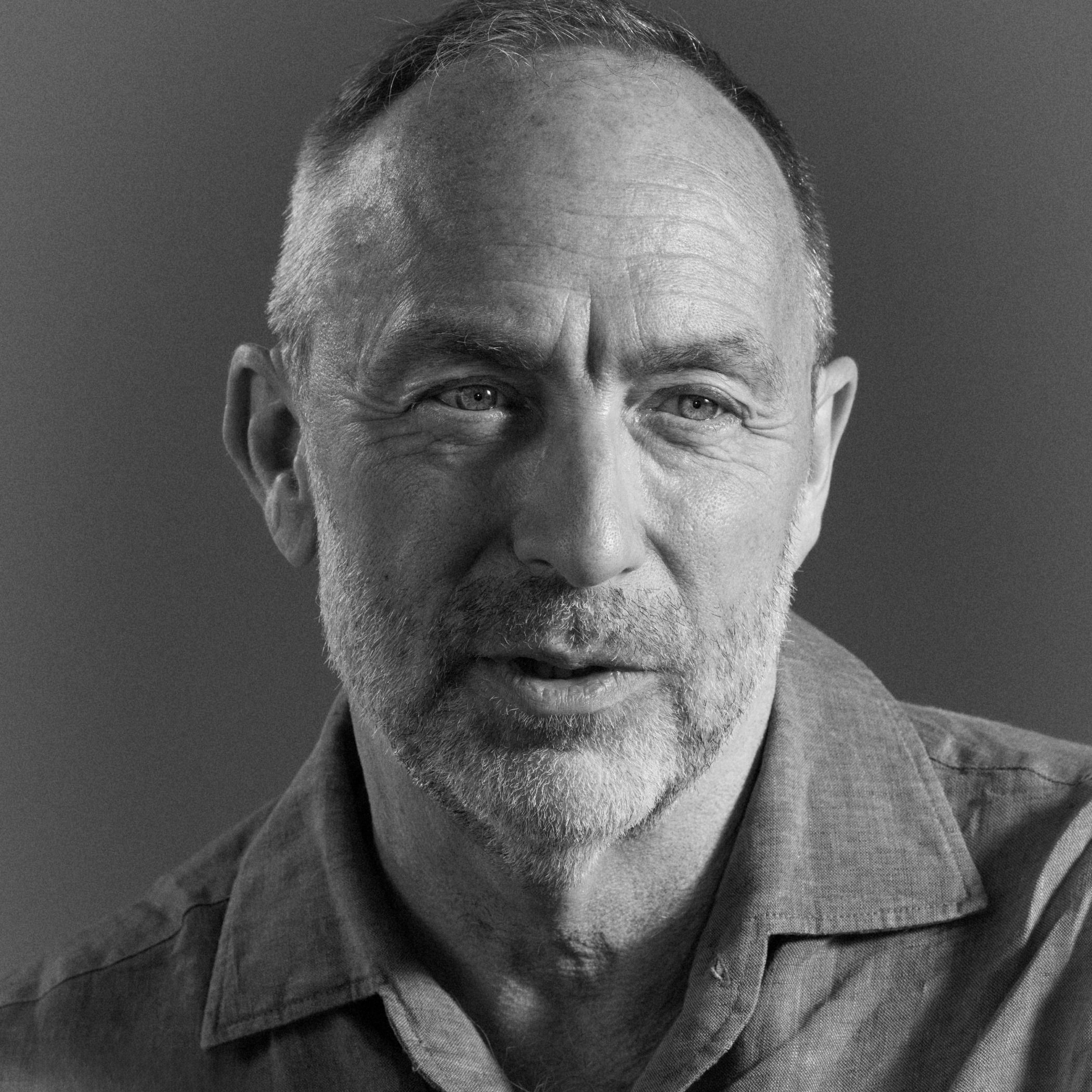

Speaker 2 It was edited by Annabelle Bacon, mixing by Efim Shapiro, original music by Dan Powell, Rowan Niemisto, and Marion Lozano. Photography by Philip Montgomery.

Speaker 2 Our senior booker is Priya Matthew, and Seth Kelly is our senior producer. Our executive producer is Allison Benedict.

Speaker 2 Video of this interview was produced by Paola Newdorf.

Speaker 2 Cinematography by Zebediah Smith and Daniel Bateman. Audio by Sonia Herrero.

Speaker 2 It was edited by Amy Marino. Brooke Minters is the executive producer of podcast video.

Speaker 2 Special thanks to Molly White, Rory Walsh, Renan Borelli, Jeffrey Miranda, Nick Pittman, Maddie Masiello, Jake Silverstein, Paula Schuman, and Sam Dolnick.

Speaker 2 Next week, David talks with Sir Anthony Hopkins about his new memoir and what he's learned in his 87 years.

Speaker 4 You get to a certain age in life, he's going through, you've got ambitions, you've got great dreams, and everything's fine. And then on the distant hill is death.

Speaker 4 And you think, well, now is the time to wake up and live.

Speaker 2 I'm Lulu Garcia Navarro, and this is the interview from the New York Times.

Speaker 1 Your home is an active investment, not a passive one. And with Rocket Mortgage, you can put your home equity to work right away.

Speaker 1 When you unlock your home equity, you unlock new doors for your family, renovations, extensions, even buying your next property.

Speaker 1 Get started today with smarter tools and guidance from real mortgage experts. Find out how at rocketmortgage.com.

Speaker 1 Rocket Mortgage LLC, licensed in 50 states, NMLSConsumerAccess.org, 3030.