#0006 Mark Zuckerberg

We break down the interview with Mark Zuckerberg

Clips used under fair use from JRE show #2255

Intro Credit - AlexGrohl: https://www.patreon.com/alexgrohlmusic

Outro Credit - Soulful Jam Tracks: https://www.youtube.com/@soulfuljamtracks

Here are the links referenced in the show:

- Federal Trade Commission v. Meta Platforms, Inc. - Wikipedia
- Meta Threatened With CFPB Lawsuit Over Financial Product Ads
- Federal agency investigating how Meta uses consumer financial data for advertising
- Commission fines Meta €797.72 million over abusive practices benefitting Facebook Marketplace
- Facebook pays teens to install VPN that spies on them | TechCrunch
- Elon Musk's X fact-checking system delayed Israel corrections for days
- Twitter's algorithm favours right-leaning politics, research finds
- Facebook’s system approved dehumanizing hate speech | PBS News
- Metadata 102 — What is communications metadata and why do we care about it?

Episode Image is in the Public Domain

Transcript

Speaker 4 On this episode, we cover the Joe Rogan Experience, episode number 2255, with Facebook CEO Mark Zuckerberg. The No Rogan Experience starts now.

Speaker 4 Welcome back to the show. This is a show where two podcasters with no previous Rogan experience get to know Joe Rogan. Joe Rogan's one of the most listened to people on the planet.

Speaker 4 His interviews and opinions influence millions of people, and he's regularly criticised for his views, often by people who've never actually listened to Rogan.

Speaker 4 So we listen, and where needed, we try to correct the record.

Speaker 4 It's a show for those who are curious about Joe Rogan, his guests and their claims, as well as for anybody who just wants to understand Joe Rogan's ever-growing media influence.

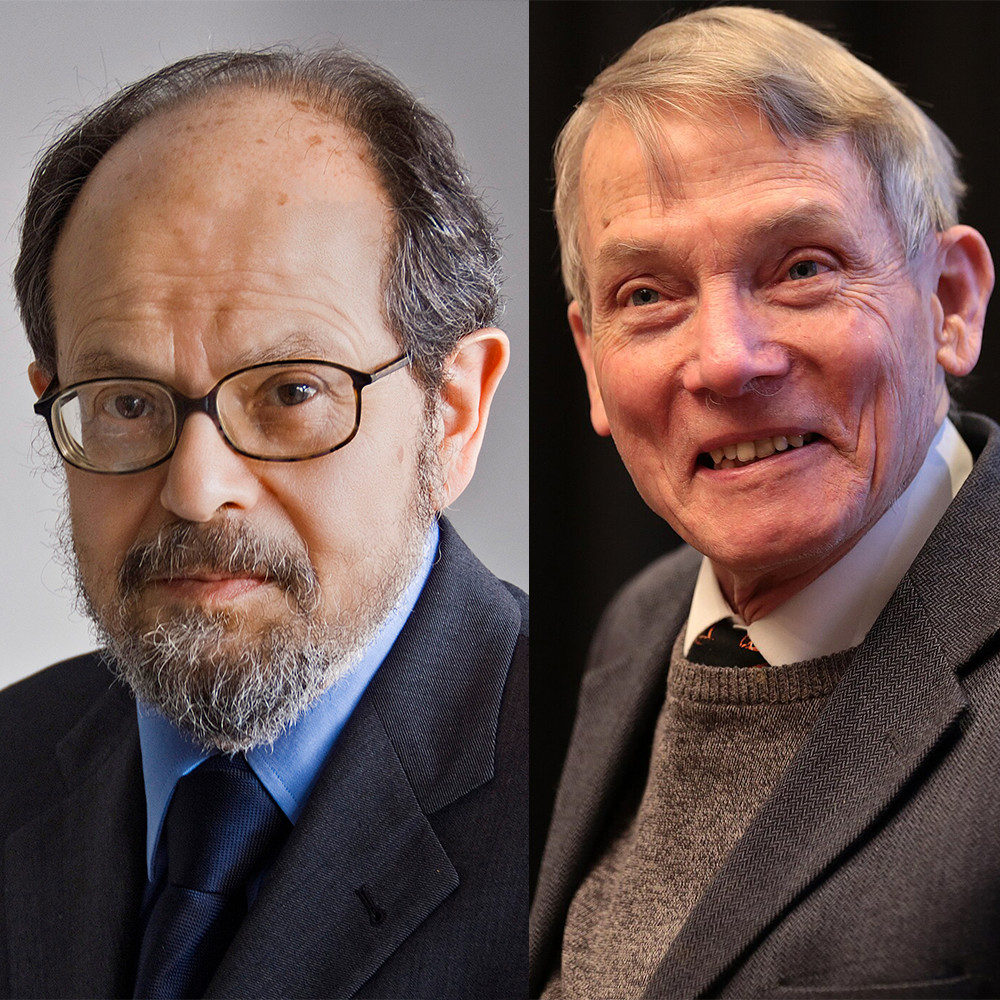

Speaker 4 I'm Michael Marshall and I'm joined by Cecil Cicarello. And today we're going to be covering Joe's January 2025 interview with Mark Zuckerberg, which has now been viewed to date 8 million times.

Speaker 4 So, Cecil, how did Joe introduce Mark in the show?

Speaker 6 We should mention, too, that that doesn't include Spotify, right? No, that's just YouTube.

Speaker 6 So, Mark Zuckerberg, according to the show notes, is chief executive of Meta Platforms Inc., the company behind Facebook, Instagram, Threads, WhatsApp, MetaQuest, Ray-Ban, Meta Smart Glasses, Orion Augmented Reality Glasses, and other digital platforms, devices, and services.

Speaker 4 Okay, so is there anything else relevant about Mark Zuckerberg that you think we should know going into this?

Speaker 6 There's quite a bit, actually. So there's an ongoing antitrust court case brought by the Federal Trade Commission, the FTC, against Facebook and the parent company Meta.

Speaker 6 The lawsuit alleges that Meta accumulated monopoly power via anti-competitive mergers, with the suit centering on acquisitions of Instagram and WhatsApp. The trial is scheduled to start this upcoming April. Quote, Meta CEO Mark Zuckerberg has personally and repeatedly thwarted initiatives meant to improve the well-being of teens on Facebook and Instagram, at times directly overruling some of his most senior lieutenants, according to internal communications made public as part of an ongoing lawsuit against the company.

Speaker 6 The lawsuit filed originally in Massachusetts allegedly shows that Zuckerberg ignored or shut down top executives, including Instagram CEO Adam Mosseri and president of global affairs Nick Clegg, who had asked Zuckerberg to do more to protect the 30 million teens who use the Instagram platform in the United States.

Speaker 6 And I'm going to include a link to that in the show notes as well.

Speaker 6 And in 2020, Mark published an op-ed in the Financial Times entitled, Big Tech Needs More Regulation, where he said, quote, we need more oversight and accountability.

Speaker 6 People need to feel that global technology platforms answer to someone. So regulation should hold companies accountable when they make mistakes.

Speaker 6 Companies like mine also need better oversight when we make decisions, end quote.

Speaker 4 Oh, so he was saying that in 2020. Well, in 2024, he's got a different view of things. Funny how that works. Feels relevant.

Speaker 6 It's a little different take now, Marsh. A little bit.

Speaker 4 So what did Joe and Mark talk about on this episode?

Speaker 6 You know, this conversation, you know, unlike the ones that we have been listening to that really range and they change topics every few moments, this one was very different.

Speaker 6 This conversation didn't have as many detours.

Speaker 6 In fact, they talk a lot about content moderation policies at Facebook. They get into a discussion about censorship and how that relates to content moderation.

Speaker 6 This moves to a lot of back and forth about government pressure and the role of government in speech.

Speaker 6 And then they talk about martial arts for a little bit, talk about hunting.

Speaker 6 They shift back to an AI discussion that eventually leads to Joe asking open-ended questions about why Apple is so bad and Mark just willing to fill in any blanks that Joe leaves.

Speaker 4 Okay, well, it sounds like we've got a lot to get to. But before we do that, we do want to say a huge thank you to our top tier patrons.

Speaker 4 These are the folks who signed up for the Area 51 all-access pass level. And those are Chonkat Chicago, Am I a Robot, Capture Says No, but Maintenance Records says yes, and Fred R. Gruthius.

Speaker 4 So those three, they took out a subscription at patreon.com slash no Rogan. And you can too.

Speaker 4 Every single patron gets early access to each episode, as well as a special patron-only bonus segment each week.

Speaker 4 This week, we're going to be touching on Joe's medical advice and Mark's desire to become a cultural elite with masculine energy. So you can check that out at patreon.com forward slash no Rogan.

Speaker 4 But for now, let's hear all about Facebook's persecution by the US government in our main event.

Speaker 6 All right, so we're jumping into the main event here. And like Marsh said, this is a lot about Facebook's persecution by the government.

Speaker 6 Here, this is Mark coming on to talk about his trials and tribulations with the United States government, very specifically with the Biden administration. So we're going to play a bunch of clips based on that.

Speaker 6 The first clip is talking about, it sort of starts, I cut a little piece out where Joe is asking Mark what started the pathway towards increasing censorship. And this is Mark's answer.

Speaker 3 Like I was saying, I think you start one of these if you care about giving people a voice. You know, I wasn't too deep on our content policies for like the first 10 years of the company.

Speaker 3 It was just kind of well known across the company that we were trying to give people the ability to share as much as possible. And issues would come up, practical issues, right?

Speaker 3 So if someone's getting bullied, for example, we'd deal with that. We put in place systems to fight bullying.

Speaker 3 If someone is saying, hey, someone's pirating copyrighted content on the service, it's like, okay, we'll build controls to make it so we'll find IP-protected content.

Speaker 3 But it was really in the last 10 years that people started pushing for like ideological-based censorship. And I think it was two main events that really triggered this.

Speaker 3 In 2016, there was the election of President Trump, also coincided with basically Brexit and the EU and sort of the fragmentation of the EU. And then, you know, in 2020, there was COVID.

Speaker 3 And I think that those were basically these two events where for the first time, we just faced this massive, massive institutional pressure to basically start censoring content on ideological grounds.

Speaker 4 To me, right out of the gate here, the framing of this seems pretty disingenuous because when it comes to COVID, the idea of censorship, for me, that wasn't about ideology.

Speaker 4 It was about what's actually true and what isn't true during a deadly pandemic. This wasn't about left-right ideology or anything like that.

Speaker 4 It was like, is this true about this virus that is killing people or not? That's what kind of moderation was going on here.

Speaker 6 There are a lot of things too that could be influencing Zuckerberg's change of heart on this, Marsh. I mean, one of the things that happened right before he changed his policies was he was threatened by Trump that if he didn't fall in line, he was going to be put in prison for life. This is a quote directly from Trump's Truth Social: election fraudsters at a level never seen before, and they will be sent to prison for long periods of time. We already know who you are. Don't do it. Zuckerbucks, be careful. End quote. So he threatened him on Truth Social.

Speaker 6 He also, in a book he wrote, didn't write, but it's a picture book with a bunch of captions in it. He said this.

Speaker 6 Now, quote, he told me there was nobody like Trump (now, he's referring to Zuckerberg) on Facebook, but at the same time, and for whatever reason, steered it against me. We are watching him closely.

Speaker 6 And if he does anything illegal this time, he will spend the rest of his life in prison, as will others who cheat on the 2024 presidential election.

Speaker 6 That feels like something that might motivate someone to change their content policies if they're being threatened by the current, the new current administration that they'll spend the rest of their life in prison.

Speaker 4 Yeah, absolutely.

Speaker 4 And we touched on it in the opening that you found Mark Zuckerberg arguing in 2020 when Joe Biden was coming into the White House that his, that companies like his need more moderation.

Speaker 4 But by this point, when this interview is given, he knows that Trump is coming into the White House. He knows Trump has said these things.

Speaker 4 And surprise, surprise, Mark Zuckerberg's opinion on what needs to happen has completely changed. So yeah, you can sort of see what might be motivating him here.

Speaker 6 Yeah. And also too, you know, we've got to mention very specifically that Zuckerberg made a shit ton of money when Facebook was at its worst, when it was at its most combative.

Speaker 6 I'll put a link in the show notes to articles that talk about how Facebook was using its algorithm to put you into positions where you disagreed with things so that you would spend more time and you would give them more of your attention and you would be available more for ads.

Speaker 6 It's a real simple equation and they followed it a lot early on in their days and then they got called out for it. But this could be a great return to that and a lot of money.

Speaker 4 Yeah, absolutely. And so when he was talking about like Brexit and 2016, at the time, that's the kind of thing that was going on.

Speaker 4 And he's arguing that, well, this was an ideological push for censorship.

Speaker 4 But during the time of Brexit, Facebook wasn't really doing censorship, which is why lies like Turkey is just about to join the EU, that was one of the arguments being pushed against us being in the EU here in the UK, because Turkey is right about to join.

Speaker 4 Next year, Turkey will be allowed to join, and however many millions of people will be able to come over to the UK legally. It's not just that those lies were allowed.

Speaker 4 Facebook were actively promoting those in paid adverts. So they were making money on that. This was a money maker. This wasn't, there was no ideological censorship going on.

Speaker 4 We were just, people were asking Facebook to stop actively promoting things that weren't factually true.

Speaker 6 And speaking of not factually true, here's a clip about fact-checking and flat earth.

Speaker 3 We're like, all right, we're just gonna have this system where there's these third-party fact checkers and they can check the worst of the worst stuff, right?

Speaker 3 So things that are very clear hoaxes, that there's like, it's not like, like, we're not parsing speech about whether something is slightly true or slightly false, like Earth is flat,

Speaker 3 you know, things like that, right? So that was sort of the original intent.

Speaker 4 And so I think it's really interesting that he brings up the flat earth as kind of an example of like, that's the egregious stuff that they were doing fact checks on.

Speaker 4 Because to be completely clear, Facebook did a terrible job of even managing at all the whole flat earth situation. I spent a lot of time around the flat earthers. I spent years watching the flat earth movement grow. It was one of the big investigations that I was doing in my day job. Facebook were actively promoting the flat earth to people. It was saying, well, if you're interested in this stuff over here, we think you might be interested in joining this flat earth group. And they did the same thing with QAnon. They were actually targeting QAnon at people who were part of yoga groups, saying, well, if you're into like a wellness lifestyle kind of area, some people who were into that area also like QAnon, so maybe you should join this QAnon group and be involved.

Speaker 4 And they did that not because they wanted to push that content.

Speaker 4 They did it because they wanted you to be using Facebook more, to be in conversations, to be engaged with the platform and spending time on. They didn't care what you were doing.

Speaker 4 So basically, between Facebook and YouTube, you can account for the entire growth of the flat earth movement and then the QAnon movement.

Speaker 4 So Facebook were doing a terrible job at the time, but this is the time that Mark Zuckerberg is saying was an example of them doing it right.

Speaker 6 All right. So here's more fact-checking discussion.

Speaker 3 You know, it was just never accepted by people broadly. I think people just felt like the fact-checkers were too biased.

Speaker 3 Not necessarily even so much in what they ruled, although sometimes I think people would disagree with that.

Speaker 3 A lot of the time, it was just what types of things they chose to even go and fact-check in the first place.

Speaker 3 So I kind of think like after having gone through that whole exercise, it, um, I don't know, it's something out of like, you know, 1984, one of these books where it's just like, it really is a slippery slope.

Speaker 3 And it just got to a point where it's just, okay, this is destroying so much trust, especially in the United States, to have this program.

Speaker 4 So I think this is a really interesting framing device from Zuckerberg as to what was actually going on, because he's sort of painting it here like the fact-checking was what was destroying trust.

Speaker 4 But that isn't what was happening here.

Speaker 4 What essentially I think is happening is this is an attempt to make it seem like Facebook, an attempt by Zuckerberg to make it seem like Facebook isn't a publisher.

Speaker 4 Like, oh, we're just a medium, we just let anybody say anything.

Speaker 4 We're not the media, we don't get to decide what's true and what isn't. But the thing is, Facebook are making decisions all the time about who gets to see what you say and who doesn't.

Speaker 4 You know, that's how it serves you ads, it's how it recommends you into groups, it's how it hides one post of yours while promoting another and drives you towards kind of engagement and argument and conflict and things like that.

Speaker 4 So it is making editorial decisions all the time about what your experience is like on the platform. And as a result, that makes them a publisher. And being a publisher comes with responsibility.

Speaker 4 You know, it's not Orwellian to say that publishers shouldn't be involved in publishing lies and untruth. It's literally the opposite of Orwellian to say that publishers shouldn't be publishing lies.

Speaker 4 And so what Mark's trying to do is trying to move himself and his company out of the space of publishing and just saying, we're just the wall. We're not responsible for what's written on it.

Speaker 4 But you are because you're choosing which bits people see and which bits people don't.

Speaker 6 Next bit is COVID and the shift to COVID-19 and content moderation around that.

Speaker 3 The Supreme Court has this clear precedent that's like, all right, you can't yell fire in a crowded theater. There are times when if there's an emergency, your ability to speak can temporarily be curtailed in order to get an emergency under control.

Speaker 3 So I was sympathetic to that at the beginning of COVID. It seemed like, okay, you have this virus.

Speaker 3 It seems like it's killing a lot of people. I don't know, like we didn't know at the time how dangerous it was going to be.

Speaker 3 So at the beginning, it kind of seemed like, okay, we should give a little bit of deference to the government and the health authorities on how we should play this.

Speaker 3 But when it went from, you know, two weeks to flatten the curve to, you know, and like in the beginning, it was like, okay, there aren't enough masks, masks aren't that important. To then it's like, oh no, you have to wear a mask.

Speaker 3 And you know, like everything was shifting around. It just became very difficult to kind of follow.

Speaker 3 And this really hit the most extreme, I'd say, during, it was during the Biden administration when they were trying to roll out, um, the vaccine program.

Speaker 4 So one thing I'd like to point out at this point: listeners will be wondering, where is Joe? You know, we have a show all about Joe Rogan's show, and we haven't, like, we haven't heard from Joe almost at all here. And that's because Joe asked a question kind of early on about what happened with censorship, and Mark gives what seems to me a pretty prepared set of answers. Like, it seems like there's been some pre-conversation about, these are the things that Mark is going to want to say. So Joe doesn't really ask follow-up questions. He's not interjecting with his own thoughts. He's letting Mark kind of put forward his entire case here.

Speaker 4 And I think when it gets to this bit, in part, I'm sort of genuinely a little sympathetic here. It must genuinely have been quite hard as Facebook at the start of the pandemic.

Speaker 4 You know, how do you police misinformation when what we know is shifting because we keep getting more information? A brand new virus has evolved. We don't know its parameters.

Speaker 4 We don't know how to protect ourselves. Right in those early days, we had no idea of how deadly it could be, how it was spreading. There was this huge set of unknowns.

Speaker 4 And so policing misinformation in that space is going to be really difficult because you can't quite tell what genuine information is.

Speaker 4 We haven't got a good understanding up front about the importance of masks. That information is going to change and what counters misinformation is going to change. So I get that that is difficult.

Speaker 4 But this is also the kind of responsibility that goes in hand with running the world's largest media company or one of the world's largest media companies.

Speaker 4 Because what we have to remember during this, as Mark is talking about this: he wasn't doing this job for free. This wasn't a voluntary role that he'd taken up. He wasn't doing it in his spare time. He is very, very handsomely rewarded for being able to navigate the difficult issues from time to time. That's very much his job.

Speaker 4 You know, in return for being able to figure a way through these difficult issues, you get many billions of dollars. If you want to make the big money, maybe you've got to work out how to solve some of the big problems sometimes.

Speaker 4 And if you can't solve them, maybe you have to take responsibility.

Speaker 6 That's what the money's for. Yeah, absolutely. You know, this is an opportunity for it to roll uphill, but he doesn't care, he wants it to roll downhill. And you're like, no, sorry, you're making a lot of money. Make the decisions, be the person who makes these decisions, and own those decisions. And I do think that there was a bit there of Facebook doing what they should have done, which is, let's curtail as much misinformation about this stuff as possible.

Speaker 6 Let's align ourselves when we can early on with as much information from the World Health Organization, information from the CDC, information with a, you know, a wide range, a large percentage of doctors.

Speaker 6 What would a large percentage of doctors say about this information? And anything else, we might not want to put out as much. And I think maybe initially there was probably something like that.

Speaker 6 But if you do that, suddenly you turn into a, you get demonized by a side that wants to be anti-vax, doesn't want any, they're the libertarians of medicine. They don't want anybody to tell them they have to wear a mask, et cetera, et cetera.

Speaker 6 And then he's losing people. So the most important thing, and again, we really need to pay attention to the profit motive, just like you suggest, right?

Speaker 6 You know, you're suggesting his profit is important, but I think the profit motive of Facebook is so important. We need to pay attention to that. Well, how many people can we keep on Facebook?

Speaker 6 How many people can we keep on there as long as possible?

Speaker 6 And if you can make sure that there's stuff that I see as a believer in science, as someone who recognizes that science is going to be the way out of this, this predicament that we're in, this pandemic, I'm going to see stuff on Facebook and it's going to make me mad.

Speaker 6 And I'm going to want to comment. I'm going to want to share it and say, don't listen to this stuff. And it's going to produce engagement and it's going to put eyes on screens, which is exactly what he wanted.

Speaker 6 So again, I've got to make sure that we always keep in sight this profit motive.

Speaker 4 Yeah, yeah, completely. I mean, the decisions that are best for public health are antithetical to a business structure that relies on conflict and getting people to argue online.

Speaker 4 So in those moments where he was taking, where Facebook was being asked to take measures that would be more protective of people, that was inevitably going to affect the bottom line for the company.

Speaker 6 All right, next clip is, here's a discussion on how adversarial the Biden administration was, according to Zuckerberg.

Speaker 3 I'm generally pretty pro rolling out vaccines. I think on balance, the vaccines are more positive than negative.

Speaker 3 But I think that while they're trying to push that program, they also tried to censor anyone who is basically arguing against it.

Speaker 3 And they pushed us super hard to take down things that honestly were true, right? I mean, they basically pushed us and said, you know, anything that says that vaccines might have side effects, you basically need to take down. And I was just like, well, we're not going to do that.

Speaker 6 There is a big difference, I think, between saying something simple like, you know, vaccines can have negative side effects.

Speaker 6 And those negative side effects are very small, but it's something to think, something to think about.

Speaker 6 Instead of, if you take the COVID vaccine, you could, you're going to be sterile or you could die in a year.

Speaker 6 You know, we're going to have these commercials where it's going to be like a mesothelioma commercial or an asbestos commercial where you're going to have a class action lawsuit if you take this vaccine.

Speaker 6 That's a totally different thing. That is spreading misinformation. Those are two. I mean, you know, this is a severe health crisis that's going on.

Speaker 6 And if you're going to send out misinformation during that, that's a different thing than just saying something like he's suggesting.

Speaker 6 And the example that he's going to use is not just simple, oh, there's vaccine side effects.

Speaker 4 Yeah, absolutely. And it's like sort of saying, if you as a news page, all you ever did was report on the times that when someone gets into a car crash, they get injured by their seatbelt.

Speaker 4 That's the only stories you ever put out. It would make it look like seatbelts were really, really dangerous. Great point.

Speaker 4 When actually seatbelts are protecting people all the time, but you're overemphasizing the outliers and that could put people off doing the kinds of things that would save them and save their lives.

Speaker 4 So it's sort of in that space.

Speaker 4 But what's interesting to me is what he's saying is, you know, the Biden administration asked them to take down this stuff that was real about side effects, and they said no and they didn't take it down.

Speaker 4 So like, how is this? It's an issue that maybe the US government shouldn't have been asking, but this isn't a question of Facebook being so bullied by the US government, because they said no and nothing happened.

Speaker 4 So, is that really why Facebook's changing their policy that they had moderation policies, but they felt they were too stringent?

Speaker 4 Because sometimes the US government would come to them and say, take this down, and they'd be able to say no, and nothing would happen.

Speaker 4 Is it that, or is it the Trump threats that you mentioned earlier? Yeah, about Trump saying, you'll be, we'll be coming for you, we'll send you to prison.

Speaker 4 Now, Trump's not going to send Zuckerberg to prison for this, but what he can do is turn his various departments of the government onto Zuckerberg, onto Facebook, onto Meta. It can make sure that those court cases go ahead. It can make rules that make it difficult for Meta to operate.

Speaker 4 So there could be repercussions to being on the wrong side of Trump's government.

Speaker 4 And it feels like that's maybe more of a motivation for Mark's change of mind here than what he's actually blaming, which is Biden's government.

Speaker 4 Someone in Biden's administration asked me to do a thing and I said no and nothing bad happened.

Speaker 6 It's such a right. You're absolutely right.

Speaker 6 Like the outcome of this was nothing. And so you changed, you decided to change your entire content moderation policy because once in a while you got a phone call you didn't like. Come on, man. Yeah.

Speaker 4 And we can say maybe those phone calls, you know, those phone calls probably shouldn't have happened. And he talks about someone from Biden's government like shouting and swearing at him and things.

Speaker 4 And we can say that is overreach, but it's overreach that had no effect. So pretty clearly, whatever was in place was robust enough to stand up to those very weak challenges.

Speaker 6 All right. Now, here is Joe talking about how hard it must be to moderate content.

Speaker 3 I want you to understand that whether it's YouTube or all these, whatever place that you think is doing something that's awful, it's good that you speak because this is how things get changed, and this is how people find out that people are upset about content moderation and censorship.

Speaker 3 But moderating at scale is insane. Yeah. It's insane. Yeah. What we were talking the other day about the number of videos that go up every hour on YouTube, and it's bananas. It's bananas.

Speaker 3 That's like, to try to get a human being that is reasonable, logical, and objective that's going to analyze every video, it's virtually impossible. It's not possible. So you've got to use a bunch of tools. You're going to get a bunch of things wrong.

So you've got to use a bunch of tools. You're going to get a bunch of things wrong.

Speaker 3 And you have also people reporting things. And how much is that going to affect things?

Speaker 3 You could have mass reporting because you have bad actors. You have some corporation that decides we're going to attack this video because it's bad for us, get it taken down.

Speaker 3 There's so much going on. Just I want to put that in people's heads before we go on. Like, understand the kind of numbers that we're talking about here.

Speaker 3 Now understand you have the pandemic, and then you have the administration that's doing something where I think they crossed the line, where it gets really weird, where they're saying what you're saying.

Speaker 3 They were trying to get you to take down vaccine side effects, which is just crazy.

Speaker 4 For me, what's interesting about this is this is a very different side of Joe Rogan to what we've heard elsewhere. This sort of feels like Joe is making Mark's arguments for him.

Speaker 4 This doesn't really feel like a conversation that's happening in the moment.

Speaker 4 It sort of feels a bit like they've discussed this beforehand, that these are the concerns, and now Joe is parroting what he's been told by Mark, even in the interview with Mark.

Speaker 4 So it's almost that we have one and a half Marks giving Facebook's point of view in this interview, which is therefore not an interview.

Speaker 4 It's basically a press conference about how great Facebook is at this point.

Speaker 6 Yeah, no, you're not wrong.

Speaker 6 It sounds like Facebook talking points. I mean, it really sounds like what they would say to you if you were to ask about their content moderation policy.

Speaker 6 It seems like you would get this in a letter or something. And think about this, Marsh. We listened to him talk to Evan Hafer.

Speaker 6 And in the conversation with Evan Hafer, he came off in the exact opposite position.

Speaker 6 He was, him and Evan Hafer were talking about how ridiculous it is that these Second Amendment people who have these gun video stations and these gun video or gun video and gun photo Instagram accounts, how they're constantly getting taken down, they're getting shadow banned, et cetera, et cetera.

Speaker 6 And it's ridiculous. And this is a protected right and we should be able to do this.

Speaker 6 And that's an example of Joe going against exactly what he's promoting here, which is it's hard to decide what's good and what's bad for a large group of people.

Speaker 6 And sometimes bots we create to make these decisions sometimes don't make the right decisions. And you and I have experienced that too, where we'll talk about something in a skeptical light.

Speaker 6 We'll talk about anti-vaxing, for instance, on YouTube. And our entire video will get taken down.

Speaker 6 They'll say, no, you're promoting anti-vaxxer or you're promoting conspiracies when you talk about conspiracy theory and you're debunking it. So we've experienced that too.

Speaker 6 But it's funny to see him on the opposite side of that. And that I thought was a really interesting change in a couple of days.

Speaker 6 I mean, 30 or 60 days, he's sort of flip-flopped on that, changed his mind.

Speaker 4 And it feels a lot like it's because he's in the room with Mark Zuckerberg that his opinions are just blowing in the wind of whichever direction the person he's talking to wants, which is, you know, that can happen.

Speaker 4 We all get influenced by the conversations we're in. But Joe and his fans see him as this independent, stand-up-to-the-man, you know, not going to be swayed by corporate speak kind of guy. Like he's just asking questions that every one of us might ask.

Speaker 4 But here he's got an opportunity to ask those kind of questions. And instead, what he's doing is, as you say, parroting the Facebook talking points with seemingly no interrogation of that.

Speaker 4 It feels very sort of serving to the corporation, which should be a surprise to people who see Joe as this outsider who's willing to stand up and say anything to anyone.

Speaker 6 And it's interesting too that they're both, both of these guys are sort of on the creator freedom side of that equation in some ways, where they're talking about how instead of the platform responsibility side, I see it as two sort of sides, right?

Speaker 6 There's a creator freedom side and a platform responsibility side. Zuckerberg doesn't want responsibility.

Speaker 6 He wants to make sure that there's more stuff out there so people can get mad about it, but he also wants to make sure that he doesn't, that all responsibility just flows off him like water off a duck's back.

Speaker 6 And Joe's on the other side too, because he's also monetizing his content and doesn't want to see it taken down, etc.

Speaker 6 So it's an interesting thing that they're both on the same side of this, even though they're technically on both, on different sides when it comes to who loads the content and who hosts the content.

Speaker 4 But I think this is why, for me, I can understand why people are against, you know, government overreach and government censorship and government interference with content.

Speaker 4 I do understand those concerns, but this is the flip side of that, is that you're right, both the platform and the creator want the benefits, but don't want the responsibilities.

Speaker 4 There's no way that in any perfectly free system, Mark Zuckerberg would be coming along and saying, I think we should limit our ability to make money in order to make sure that nonsense, things that are deadly and dangerous, don't spread.

Speaker 4 I just don't think that is going to be a motivation that a business is going to willingly subscribe to unless there are reasons and incentives for them to do that.

Speaker 4 And that's kind of where a government role or an external kind of role comes in because people don't grasp responsibility willingly all the time, especially when there's a profit motive not to.

Speaker 6 Next clip is how people get information.

Speaker 3 So I think that what you're saying of, yeah, how do people get their information now? It's by sharing it online on social media.

Speaker 3 I think that that's just increasingly true. And my view at this point is like, all right, like we started off focused on free expression. We kind of had this pressure tested over the last period. I feel like I just have a much greater command now of what I think the policies should be. And like, this is how it's going to be going forward.

Speaker 3 And so, I mean, at this point, I think, you know, I think a lot of people look at this as a purely political thing.

Speaker 3 You know, it's because they kind of look at the timing and they're like, hey, well, you're doing this right after the election.

Speaker 3 It's like, okay, I try not to change our content rules right in the middle of an election either. It's like, there's not like a great time to do this. It's, you know, and you want to do it a year later.

Speaker 4 Yeah, so literally, is he not just saying here, look, I know my platform is the main way that lots of people get their information, which is why it's so important that I make less effort to check if that information is true.

Speaker 4 That's what he's arguing for here. It's the opposite. It should be absolutely the opposite here.

Speaker 6 Exactly.

Speaker 4 And he brings up like elections and things.

Speaker 4 Just before this clip, in fact, he brought up elections in India as well, which if I were him, I wouldn't mention, if I was the CEO of Facebook, I wouldn't mention Indian elections, given that Facebook has been accused of literally enabling and benefiting from the rise of Narendra Modi's Hindu nationalist party, the Bharatiya Janata Party, in India ahead of the 2019 election.

Speaker 4 I think they had a major role in pushing that between Facebook and WhatsApp, and they were heavily criticized for the way the discourse went in that and Facebook's hand in that.

Speaker 4 So personally, I wouldn't be bringing that up if I was Mark Zuckerberg. I'd be leaving Indian elections out of things.

Speaker 6 You know, it's interesting to me.

Speaker 6 This is something that came up when I was thinking about not only his change in content moderation policies, which I think are going to drive a group of people to Facebook now that are going to be a little more right-wing.

Speaker 6 I think that you're going to see a lot more people who have left Facebook because of some content moderation policies that they decided to put in place because they probably didn't want to get any sternly worded letters from the Biden administration.

Speaker 6 They probably changed their content moderation policies when Biden came in.

Speaker 6 I think we all need to understand that this is a profit-motive business and the easier waters they're in, the better off they are. So aligning with the current administration is the best thing to do.

Speaker 6 That's why I think Zuckerberg writes an op-ed in 2020 that says we need more content moderation.

Speaker 6 And here he is on Joe Rogan's show saying we don't need content moderation based on when a new president gets put in office. So I think we need to pay attention to that.

Speaker 6 But, you know, I think we're going to see more people on the left leave Facebook.

Speaker 6 They're going to be leaving Facebook behind or spending less time on Facebook, but we're going to see a lot more people on the right.

Speaker 6 That may cause a problem for Zuckerberg because there won't be any antagonism. And one of the things I thought, and this is, this is Cecil sort of dipping in a little bit into conspiracy.

Speaker 6 So Marsh, you can reel me in if you want. But one of the things I thought was they also just recently introduced AI profiles and they pulled them off the market fast because people hated it, right?

Speaker 6 So immediately everybody hated these AI profiles. They pulled them off and they didn't really say that they were pulling them off forever.

Speaker 6 It sounded like they were pulling them off in one of the articles I read because of bugs that they had, that they weren't, they were pulling them off because they didn't like how they performed.

Speaker 6 But I will say, what better way to get

Speaker 6 someone who is sort of a low computer literacy user on a platform where they want to be angry and they can argue with a computer for a while?

Speaker 6 You know, I feel like there might be a strategy here to keep you on, especially low computer literacy people, on a platform that, you know, again, is now leaning towards more right-leaning content.

Speaker 6 This, I think, could be a huge cash cow for him if he implements this in the right way.

Speaker 4 Yeah, and do you know, I think you may be onto something there. I think it doesn't even have to be a political thing. I mean, one of the things we noticed: we shared last week's episode, the Mel Gibson episode.

Speaker 4 I shared it to my Facebook, and in the comments on Facebook, there were people pretending to be Mel Gibson, saying, I am Mel Gibson's official page. Come chat to me on Telegram.

Speaker 4 And so, I think we already have a world in which Facebook is filled with inauthentic accounts, bot accounts, AI accounts.

Speaker 4 And what they're doing at the moment is looking for those triggers where people who are not internet savvy, people with low literacy when it comes to how the internet works and how scams work, it's already looking for the triggers that will fish those people in.

Speaker 4 And I think the more we see a relaxing and an abdication of content moderation from Facebook, it's not just the political arguments that are going to sort of go up in flames. It's also going to be the people who get caught up by scams that would otherwise be moderated out.

Speaker 4 We weren't seeing people pretending to be Mel Gibson on Facebook pages anywhere near as much two, three, four years ago. But as the strings have loosened around that, because there's very little profit motivation in stopping that, other than it tarnishes the reputation of your product somewhat, there's very little other reason to do it.

Speaker 4 So as long as the reputational damage doesn't outweigh the amount of money that you're saving by not having that moderation, it's still financially a worthwhile thing to do.

Speaker 4 And so it'll be interesting to see where this goes from here.

Speaker 6 I don't get an opportunity to do this very often. So I'm going to do it right now. I'm going to correct you, Marsh. They didn't say that they wanted a message. They actually implored you, that bot implored you to massage them. They said, massage me, please. So I just want the point corrected on the show: Marsh was explicitly asked to massage a bot.

Speaker 4 Yes. Massage Mel Gibson on Telegram is what my Facebook page was telling me.

Speaker 6 Next up, a short bit about the Consumer Financial Protection Bureau, which is a recurring theme on Joe's program.

Speaker 3 The tough thing with politics is that there's like well, when you say who someone's coming after, are you referring to kind of the government and investigations and all that?

Speaker 3 I mean, so the issue is that there's what specific thing an agency might be looking into you for, and then there's like the underlying political motivation, which is like, why do the people who are running this thing hate you?

Speaker 3 And I think that those can often be two very different things.

Speaker 3 So, and we had organizations that were looking into us that were like not really involved with social media. Like, I think like the CFPB, like this financial, I don't even know what it stands for.

Speaker 3 It's the financial organization

Speaker 3

that Elizabeth Warren had set up. Oh, great.

And it's basically, it's like, we're not a bank. The deep bank is like

Speaker 3 sexual. Yeah, no, so

Speaker 3 we're not a bank, right?

Speaker 3 Right, it's like, what does Meta have to do with this? But they kind of found some theory that they wanted to investigate. And it's like, okay, clearly they were trying really hard, right, to like find some theory. But, I don't know, it just kind of, like, throughout the party and the government, there was just sort of, I don't know how this stuff works. I mean, I've never been in government. I don't know if it's like a directive or it's just like a quiet consensus that, like, we don't like these guys, they're not doing what we want, we're going to punish them.

Speaker 6 Is that what it is, Marsh?

Speaker 4 Yeah, so this is Mark playing the, oh shucks, I'm just a lowly CEO billionaire, how would I know about the regulations that apply to me? How would I know anything about the agencies that do that or the things that they do? Yeah, I think this is really disingenuous. The other thing that's interesting is, you know, we've already done the Marc Andreessen conversation. I'm so glad we covered this, because we heard Joe being primed on debanking in that episode, and here it is coming up. So Joe's already ready. You can hear him. He says, oh, the debanking agency. So he now knows all about it.

Speaker 4 He's willing to just take whatever Marc Andreessen said last time and apply it to Mark Zuckerberg.

Speaker 4 I wonder whether that's a total coincidence or whether there's some kind of interesting timing, that maybe Zuckerberg is aware that that conversation's happened or, you know, they're all billionaire mates in Silicon Valley.

Speaker 4 One thing that is also worth pointing out: at least Zuckerberg does know it's the organization that Elizabeth Warren set up.

Speaker 4 I know we mentioned on a previous show that Joe and Marc Andreessen described her as being the head of it.

Speaker 4 And we didn't correct on that because there were so many other things that we were criticizing the conversation for.

Speaker 4 Elizabeth Warren wasn't the head of it, she set it up and was never kind of running it, but nevertheless, she was the person who initially kind of founded it.

Speaker 4 But just like we heard with Marc Andreessen, we've got Zuckerberg here pretending that he thinks this is all about like political differences and persecution for political opinion and not for shady business practices.

Speaker 4 But if he genuinely has no idea what the CFPB stands for or why they might possibly be looking into Facebook, he could read the filing, and that information is going to be in the filing there.

Speaker 6 Yeah, you know, you could just find out what that acronym is just by reading the lawsuit you were given.

Speaker 4 Yeah, because if he genuinely doesn't know what he's being investigated for, either that's true, and therefore he's bad at his job, because the buck stops with him, he would be made aware of this, so he's clearly not paid attention. Or it isn't true, and he's trying to pretend to Joe that, oh, I have no idea, they're just coming for me. But he's got the documents. He will know what this is for. It seems very disingenuous to be painting this way.

Speaker 6 Yeah.

Speaker 6 And like you suggest, it sounds almost exactly like what Marc Andreessen said.

Speaker 6 If you think back, Marc Andreessen was like, well, we kind of know how they do this. And this is how the government comes after you. And you don't expect it. And they just sort of sic the government on you.

Speaker 6 And he had that sort of almost exact same tone even that Zuckerberg has.

Speaker 6 So my suspicion is that Zuckerberg just listened to that episode, or he read a couple of the news articles, because it was clipped all over. And he probably listened to the news articles and thought, oh my gosh, this is going to be super easy. All I got to do is mention this. And Joe will immediately fall on my side because he hates this idea of regulation that Marc has already implanted in his head. This would be a super easy sell. And he sells it.

Speaker 6 And Joe falls for it immediately. Just, oh, yeah, of course. Yeah, sure. No problem. Yeah. That's the debanking thing. I hate it.

Speaker 4 Yeah, absolutely. There's just no interrogation. There's no follow-up. It's like that has already been seeded and now it's kind of embedded. But if Mark isn't sure what Facebook is being investigated by the CFPB for, he could read Bloomberg Law. There's a website which explains it.

Speaker 4 According to that, the CFPB staff informed Meta on September 18th that it's weighing potential legal action related to advertising for financial products on the company's platforms.

Speaker 4 So the reason the Consumer Financial Protection Bureau has come in is because Meta is allowing adverts for financial products and is kind of involved there.

Speaker 4 We can also see on the record that it alleges that the company that Meta improperly obtained consumers' financial data from third parties and then put that into its massively profitable targeted advertising business.

Speaker 4 So, if it's obtaining data it shouldn't have access to and then using that to extra target its advertising to see who's going to be most vulnerable to, for example, this particular financial product.

Speaker 4 So, if you can see someone's financial records and say, you are in a lot of debt, I'm now going to target you with an advert for a loan company with incredibly high percentages, because we've seen from the various information we have that maybe you're not fully savvy with how compound interest works and things like that, or maybe you're so desperate that you need that payday loan to get you to the next thing.

Speaker 4 Then they could micro-target in that way to find the most vulnerable people.

Speaker 4 These are the kinds of things that I think anybody else who knows the detail of this, if you explain this to someone, the average person would say, yeah, Facebook shouldn't be doing that.

Speaker 4 It shouldn't be looking into how much debt you're in and then using that to target predatory loans at you. The average person would say, that's a bad thing.

Speaker 4 But you can't portray it that way on Joe's show because you need everybody to be against these rules.

Speaker 4 So you have to sort of sell this untruth that it's, oh, it's just coming after Facebook's political opinions or they've got on the wrong side of the government, and not that they are being caught doing these shady practices.

Speaker 6 Yeah, be vague and you can win people over.

Speaker 4 Yeah.

Speaker 6 Zuckerberg's now going to make a plea that the U.S. should stand up for its companies.

Speaker 3 And really, when you think about it, the US government should be defending its companies, right? Not be the tip of the spear attacking its companies.

Speaker 3 So we talk about a lot: okay, what is the experience of, okay, if the US government comes after you. I think the real issue is that when the US government does that to its tech industry, it's basically just open season around the rest of the world.

Speaker 3 I mean, the EU, I pulled these numbers. The EU has fined the tech companies more than $30 billion over the last, I think it was like 10 or 20 years. Holy shit.

Speaker 3 So when you think about it, like, okay, there's, it's like, you know, $100 million here, a couple billion dollars there.

Speaker 3 But what I think it really adds up to is this is sort of like a kind of EU-wide policy for how they want to deal with American tech. It's almost like a tariff. And I think the U.S. government basically gets to decide how are they going to deal with that, right? Because if some other country was screwing with another industry that we cared about, the U.S. government would probably find some way to put pressure on them.

Speaker 3 But I think what happened here is actually the complete opposite. The U.S. government led the kind of attack against the companies, which then just made it so like the EU, basically, and all these other places are just free to just go to town on all the American companies and do whatever you want.

Speaker 4 Ah, can't America just defend the American companies? Isn't that what it's all there? Isn't that what the government's for, if not to defend corporations?

Speaker 6 Isn't that what Joe Rogan's all about, having the big government coming in to defend corporations? Isn't that the whole point of the Joe Rogan show?

Speaker 4 No, it's not. Like, he should be completely against this. This should be everything that Joe is against. He doesn't like big corporations. He doesn't like big government. But Mark is arguing that government should actively be on the side of the corporations. So this is just ridiculous. This should be challenged, and the fact that Joe doesn't challenge this says an awful lot about how Mark has got him into this space where he's going to just accept everything, essentially.

Speaker 6 Yeah. Also, defend their companies from what? You know, this sounds like Marc Andreessen again, because Marc Andreessen was saying, Trump wants us to win. He said that, right? Well, what does that even mean, right? Trump wants us to win. That sounds like a slogan rich people would sell to poor people who label themselves patriots, right? This is the exact same thing. The U.S. should defend its companies. Well, what does that mean? What are you talking about? If we're going to defend the company, tell me what specific situation we are defending them from.

Speaker 6 Because those situations can change wildly based on what the corporation is actually doing.

Speaker 4 Yeah. And in terms of what Facebook is currently requiring the government to defend it from, Mark doesn't go into details. He kind of gets kind of loose around this.

Speaker 4 Oh, there's all these billions, tens of billions of dollars of fines over the last 10 or 20 years, but he doesn't go into details.

Speaker 4 So let's actually look at some of the cases that the EU have brought against Meta, against Facebook, over the last few years. So in 2023, Meta was fined $1.2 billion for illegally transferring privacy data on millions of European users, in a ruling where the judgment included that these illegal transfers of data were systematic, repetitive, and continuous. So there are rules around where your privacy data as a user can be kept.

Speaker 4 And Facebook were ignoring those laws and just moving the data around to places that you hadn't given consent for your data to be. And this is really important because your data is what Facebook makes money from. Your data is what it uses to target advertisements. It's what it uses to find advertisers and say, it's good to be advertising here because we can find you your exact customers. So when it comes to things like those lending loans, once you have that data, you can see who exactly might need that kind of service and then target them. The EU was trying to protect your privacy data so that the corporation didn't have access to all your data.

Speaker 4 There was another one in 2024. Meta was accused of massive illegal data processing by European consumer groups, who said, quote, with its illegal practices, Meta fuels the surveillance-based ad system, which tracks customers online and gathers vast amounts of personal data for the purpose of showing them adverts.

Speaker 4 Do you want more adverts?

Speaker 4 Because what this was saying, the EU was saying, the way in which Facebook is serving you adverts is going against our rules as to how many adverts and how specific they can be.

Speaker 4 This is protecting consumers from predatory business practices.

Speaker 4 There was another fine a few years ago: the EU fined Meta $792 million for abusive practices that benefited Facebook Marketplace. To say, if you sell through Facebook Marketplace, you will get all these extra kind of benefits that were monopolistic. And the EU said, no, we want free enterprise. We want free competition. We don't want monopolies. And so it was fined three quarters of a billion dollars for that.

Speaker 4 And perhaps the most sinister of all of them, and this is one called, this is from 2019 called Project Atlas, was a program that Facebook ran.

Speaker 4 We did a segment on it on Skeptics with a K, my other podcast, where Facebook had a second app that it was encouraging teens to install, which was basically a VPN that would spy on them at all times.

Speaker 4 Between 2016 and 2019, Facebook paid users between the age of 13 and 35, so users as young as 13, they paid them $20 a month plus some referral fees to sell their full privacy by installing this app onto their device, this Facebook research app.

Speaker 4 And according to a mobile security expert, users as young as 13 were being paid to give Facebook, quote, the ability to continuously collect private messages in social media apps, chats from instant messaging apps, not just Facebook, any instant messaging app on your phone.

Speaker 4 You'd give Facebook access to your phone fully, including photos and videos sent to others, emails, web searches, web browsing activity, and even the ongoing location information by tapping into the feeds of any location tracking apps you had installed.

Speaker 4 So it was paying users as young as 13 $20 a month to have a clone copy of everything they did on their phone. If you think about how many, say, 17 year olds were in their first relationship, who were sending pictures to their partners. Now think of how many people who were maybe a little younger than that, 16 year olds, sending pictures to the person they were dating. We're not going to condone it, but it would happen.

Speaker 4 Facebook was collecting those images.

Speaker 4 It wasn't looking to do that. It was trying to do research in order to essentially try and combat Snapchat's rise, to try and sort of learn how Snapchat was doing so well in that demographic.

Speaker 4 But Facebook was done for this. This was a big, big deal.

Speaker 4 So these are the cases that Mark Zuckerberg is saying, well, the US government should be defending us from the evil corporation, the evil other countries who just want to take us down.

Speaker 4 Mark Zuckerberg's arguing here, without telling you it's what he's arguing for, that the US government should be protecting a company that wants to pay teenagers for access to every piece of information, every photo, every video they ever send, and to collect all that and harvest it so they can be better at serving those kids ads.

Speaker 4 That's what was going on here.

Speaker 6 You know, when you list this litany of things that they've done that are, that are data breaches, essentially data breaches, reaching into demographics from all over, taking their data, targeting them with predatory ads, things like that.

Speaker 6 That's a horrible thing. And I think most people, when they hear that, would say, wow, that's some really shady business dealings. That's really terrible.

Speaker 6 Maybe somebody should be taking them to court for things that they've done. But what he did was what Marc Andreessen did, which is they couched this ahead of time in free speech.

Speaker 6 Marc Andreessen did this when he was talking to Joe and he's saying, oh, these people are debanked. Oh, they're not debanked because they're risky crypto startups. They're debanked because they have the wrong opinions. And then he goes into, oh, but here's essentially why I care about this. And it's a totally different reason why he cares about it.

Speaker 6 But he couches it initially in this, oh, this is why they're debanked. Mark Zuckerberg starts this conversation about free speech. Here's free speech, free speech issues, content moderation, how we should be making sure there's more speech, how it's a meritocracy, it's a marketplace of ideas, et cetera, et cetera, et cetera. And, I don't understand why the U.S. government isn't defending us. But he never once mentions it's about privacy data. He makes you put the connection up that it's about free speech, because he never puts a bridge between those two things. Instead, your brain and Joe's brain has to follow him.

Speaker 6 And the following is, well, I've got to attach this free speech thing we just talked about.

Speaker 4 Yeah, absolutely. And what he's doing is he knows there is a term you can use which will shut down Joe's critical thinking in this conversation, that will stop Joe asking any kind of critical follow-up questions.

Speaker 4 Once you frame it, as you say, as free speech, once that's in Joe's brain, he will not ask the kind of questions that a good interviewer, a curious interviewer might ask.

Speaker 4 It would have been very easy for Joe ahead of the show to be even tangentially aware of some of those cases that I brought up. We know he's talked to Mark ahead of time.

Speaker 4 He talked about the fact that they've had pre-conversations. It'd be dead easy for Joe to say, when Mark said, oh, there's all these different cases I don't even know what they're for.

Speaker 4 It'd be easy for Joe to say, Jamie, could you pull that up? Jamie, could you go and just Google why is Facebook being targeted by the EU? What are the cases from the EU? And he'd have found this.

Speaker 4 And if Joe was aware of that Project Atlas, for example, that Facebook was gathering all the photos and videos of users as young as 13 and paying them for the privilege, I think Joe would be against that.

Speaker 4 I think Joe would say that's a bad thing, but he's not inquisitive enough to ask in the moment because Mark Zuckerberg has switched off that criticism by saying the magic words

Speaker 6 Free speech muzzle. There you go. That's all he's got to do is free speech muzzle on him.

Speaker 4 All right.

Speaker 6 Next clip is Joe.

Speaker 6 You know, this is a section we sort of shift here to talk about how community notes are going to be implemented on Facebook.

Speaker 3 But you do have to be careful about misinformation, and you do have to be careful about just outright lies and propaganda complaints, or propaganda campaigns, rather. And how do you differentiate?

Speaker 3 Well, I think that there are a couple of different things here. One is this is something where I think X and Twitter just did it better than us on fact-checking.

Speaker 3 We took the critique around fact-checking, sorry, around misinformation, we put in place this fact-checking program, which basically empowered these third-party fact-checkers.

Speaker 3 They could mark stuff false, and then we would down-rank it in the algorithm. I think what Twitter and X have done with Community Notes, I think is just a better program.

Speaker 3 Rather than having a small number of fact checkers, you get the whole community to weigh in.

Speaker 3 When people who usually disagree on something tend to agree on how they're voting on a note, that's a good sign to the community that there's actually like a broad consensus on this, and then you show it.

Speaker 3 And you're showing more information, not less, right? So you're not using the fact check as a signal to show less.

Speaker 3 You're using the community note to provide real context and show additional information. So I think that that's better.

Speaker 4 Yeah, funnily enough, Mark Zuckerberg thinks the better solution is one that just happens to involve him spending less money on fact checkers and relying entirely on more engagement on the platform, which is obviously hugely in his interest, to farm this all onto the users and to stop putting it on the people he pays.

Speaker 6 Yeah, that's a great point, Marsh.

Speaker 6 Joe is very much follow the money until a billionaire blows smoke up his ass, and then he's not anymore. He doesn't do that anymore.

Speaker 6 But really, if you just follow the money on this, this is 100% in his favor to do exactly what you say, which is lay off a group of his workers and then also create more animosity on his platform.

Speaker 6

Community notes, too. Let's just talk about this real quick.

One, he keeps on saying Twitter and X, which I think is really funny. Even Mark Zuckerberg doesn't want to call Twitter X.

Speaker 6 He keeps mentioning them both at the same time.

Speaker 6 But I want to say community notes are just shitty. They're not super great.

Speaker 6

They leave up false information. So the false information is still there.

It just has a note underneath it. And that can sometimes have hateful information on it.

Speaker 6

It can be anti-trans information. You know, we found that people who are getting bullied online are more susceptible to self-harm.

And if you keep that stuff up there, that's not good for them.

Speaker 6 Even if there is some small community note under it, that's something we need to pay attention to.

Speaker 6 These are places that a lot of young people spend a lot of time, and they can be very influenced by a lot of this stuff.

Speaker 6 So I think it's a terrible change to not take down hurtful content and instead community note it. I think that's something that we should definitely be paying attention to.

Speaker 6 And also what happens when we start getting our information from places like Facebook, which is what he seems to address early on?

Speaker 6 He's like, oh, we're going to stop listening to media and start listening to Facebook.

Speaker 6 Well, if we don't have anybody out there, if we see especially a suppression by the government of information, especially in this government, how they've been very hostile to the media, what's going to happen?

Speaker 6

Our sort of collective IQ is going to go down. Our collective knowing of things is going to go down.

We're not going to know what's happening.

Speaker 6 We're going to be repeating false information and community noting false information as real if we don't know what reality is.

Speaker 4 Yeah, yeah, absolutely. We need journalists because one of the tenets of journalism is to have multiple sources, multiple lines of evidence that correlate and

Speaker 4 lead you to a conclusion. And what we've already seen, even in the episodes we've done so far, is it's much easier and quicker for you to

Speaker 4 do away with all of that and just throw out supposition.

Speaker 6 But unfortunately, that doesn't mean that it's true, and it can lead you to spread things that are really comprehensively untrue. And also, one last piece: community notes don't appear right away. So what happens is the bad information gets out there, and it stays out there until enough people in the community note volunteer system say that's wrong, and then they vote on it and figure out sort of what gets put underneath it. But all that takes time, and that thing is still visible, is still in the algorithm, and is still getting published to people. So you're spreading misinformation and then you're going back and changing it.

Speaker 6 And we've all heard that comment, which is, you know, the original story is on page one, but the correction is on page nine. And it's like, that's the same thing with community notes.

Speaker 6

The correction's on page nine. I might have seen that within the first 30 minutes it was up because it was viral.

And now I'll never see it again. And the community note that tells me it's false.

Speaker 6 So this next clip is talking about Hunter Biden's laptop.

Speaker 3 Well, getting back to the original point, this is why I think, you know, it makes sense to me that the government didn't want you to succeed and to have the sort of unchecked power that they perceived social media to

Speaker 3 have.

Speaker 3 And I think one of the benefits that we have now of the Trump administration is that they have clearly felt the repercussions of a limited amount of free speech, of free speech limitations, censorship, government overreach.

Speaker 3 If anybody saw it, look,

Speaker 3 I don't know what the actual impact of the Hunter Biden laptop story would have been. I don't know.

Speaker 3 But there's many people that think it probably amounted to millions of votes overall in the country of people that were on the fence, the people that weren't sure who they're going to vote for.

Speaker 3

If they found out the Hunter Biden laptop was real, they're like, oh, this is fucking, the family's fucking crazy. And they would have voted for Trump.

That's possibly real.

Speaker 3

And if that's possibly real, that could be defined as election interference. And all that stuff scares the shit out of me.

That kind of stuff scares the shit out of me.

Speaker 3 When the government gets involved in what could be termed election interference, but through some weird loophole, it's legal.

Speaker 4 First of all, he talks about, you know, the government wanting to shut down the unchecked power they perceived you to have.

Speaker 4 And he sort of says that in a way that he thinks it's bad that the government are trying to do that. I would say that I don't think social media companies should have unchecked power.

Speaker 4 I think that there should be limits on how much power a social media company should have. And I thought that Joe would think the same.

Speaker 4 You'd imagine that Joe would be against the idea of any corporation having no limits to how powerful or influential they could be.

Speaker 4 It seems like that's the kind of position Joe would normally take up, except when he sat down talking to a billionaire who wants that kind of power, who wants the power to make money at all costs.

Speaker 4 And then when it comes to the Hunter Biden laptop story, one of the reasons that was a thing initially, where the line was that this story isn't real and it wasn't initially allowed to circulate, was because there were initial reports floating around that this was a piece of Russian propaganda.

Speaker 4 And I don't think they were right in the actions that they took, but obviously, it's important to stop actual propaganda being firehosed at the electorate during an election, because that's election interference when you allow foreign propaganda to do that.

Speaker 4 So there's always going to be some level of checks and balances on things that are suspected to be foreign propaganda. And in this case, it turns out it was his laptop.

Speaker 6 But because there was this initial report that there was a big, big piece of Russian propaganda coming, it's understandable that they were concerned about that, because you want your elections to be free from the meddling of foreign propaganda from hostile states. And he is complaining and really sounding like, oh my gosh, election interference, I can't believe that, that feels like election interference. And I'm thinking, well, my gosh, this is cherry-picking. Here's the thing, man: there's shady phone calls that happened while Trump was in office, a fake elector scam.

Speaker 6 There was letters from the Justice Department sent out to all these different places.

Speaker 6 Like, there was real election interference while Trump was in office and you don't even seem to care, but somehow this laptop gets put out there and you immediately think, oh, that's crazy election interference.

Speaker 6 And that's awful that, you know, it would have changed so many different people's votes.

Speaker 6 The other thing too is, you know, a lot of people keep talking about how, oh my gosh, it's a Hunter Biden thing. And one of the things is that.

Speaker 6 You know, the reason why they wanted a lot of that story pulled down is because it had pictures of Hunter Biden's dick.

Speaker 6 That's why. It's because there was pornography and other things. It was non-consensual pornography, and it was put out on the internet and spread all over the place.

Speaker 6 And they didn't want it out there because it's disgusting, because it's grotesque to share someone's intimate moments on video without their permission.

Speaker 6 And that's why there was a lot of pushback to this.

Speaker 6 And people seem to forget that that's why. If you look at some of the requests, that's what literally most of the requests were about.

Speaker 4 Yeah, yeah, after the fact, certainly, I know that there were efforts initially to stop the spread of it before it was confirmed to be his laptop because it was suspected to be a piece of propaganda.

Speaker 4 So there was some initial kind of slowing of the spread, but then afterwards, the stuff that was removed was absolutely the revenge porn aspect.

Speaker 4 People talk about the laptop like it was filled with all sorts of deeply incriminating evidence about

Speaker 4 huge amounts of money being made in all these dodgy kind of ways and fraud and things like that. And that just wasn't there.

Speaker 4 It was his laptop, but the amount of stuff that they think was on there just wasn't on there.

Speaker 6 All right, so this is the last section in the main segment. We're going to be talking about the Twitter files and congressional hearings.

Speaker 3 I do think that

Speaker 3 having people in the administration calling up the guys on our team and yelling at them and cursing and threatening repercussions if we don't take down things that are true is like, it's pretty bad.

Speaker 3

It sounds illegal. I would love to hear it.

I wish somebody recorded those conversations. Well, I mean, those would be bad.

I mean, again, it's great to listen to.

Speaker 3 Somebody could animate them, maybe polytunes.

Speaker 3 A lot of the material is public.

Speaker 3 I mean, Jim Jordan led this whole investigation in Congress.

Speaker 3 I mean, it was basically, I think about this as like, you know, what Elon did on the Twitter files when he took over that company, I think Jim Jordan basically did that for the rest of the industry with the congressional investigation that he did.

Speaker 3 And we just turned over all of the documents and everything that we had

Speaker 3 to them, and they basically put together this report.

Speaker 4 Yeah, just a reminder, you know, Mark is saying it's pretty bad that people in the administration called up and were yelling and cursing and threatening.

Speaker 4

And yeah, I think that is pretty bad, but nothing happened. They said, take it down.

Facebook said no, and nothing happened. So that's the real censorship we're talking about here.

Nothing happened.

Speaker 4 And then the Twitter files, again, that was much of a nothing essentially, wasn't it?

Speaker 6

Yeah, mostly nothing. And I think like what the Twitter files really essentially boiled down to was a gish gallop.

They dumped a ton of information out. There's a ton of information there.

Speaker 6 And then you have two pretty biased reporters talking about very specifically the pieces that they want to pull out of context and the pieces that they want to show the world.

Speaker 6 And what you're seeing is essentially a content moderation discussion by Twitter officials on what they're going to be taking down and communications with other people.

Speaker 6 If you're not involved in these conversations, sometimes they feel like, oh, you know, that feels like we're looking behind the curtain and it feels like maybe they're doing something shady when it's really just their job to make these decisions, right?

Speaker 6

This is their job. This is what they're paid to do.

So they make these decisions.

Speaker 6 Much of the Hunter Biden laptop was, like we suggested, non-consensual intimate videos of him.

Speaker 6 And that's something that you really want to make sure that you're taking down. And they were getting those requests.

Speaker 6 And the one big piece of the Twitter files that everybody seems to forget is that Joe Biden was just a citizen at the time. That's it.

Speaker 6

He's Joe Biden, previous vice president, previous senator. That's what he is.

He's not president. He's not anybody.

Speaker 6 He's a guy who's running for office, sending a message as a private citizen, and his campaign as a private citizen's campaign, to say, hey, we would appreciate it if you took my son's dick off of Twitter.

Speaker 6 Is that possible? And then that's the real issue. And people are saying, oh, this is major

Speaker 6 overreach by the government. What government? He's just a guy.

Speaker 4 Yeah, I mean, you could, people would be able to push back on it and say, well, he's not just a guy in the sense that he's been in, you know, in power for so long. He's been in the Senate for so long.

Speaker 4